This is a general talk, so here are some of the players for our 20th-century journey, if you haven't met them.

We all use Linux even if we don't know it. When you see a machine reboot at a grocery store it's like “Oh hey there's the penguin again”. Linux has arguably become the most ubiquitous operating system.

How did that happen?

The story usually told is that an undergrad posted about his hobby project and a bunch of people helped out.

Wait, a college kid with a hobby project started a computer revolution by posting?

That's absurd!

What about these other things?

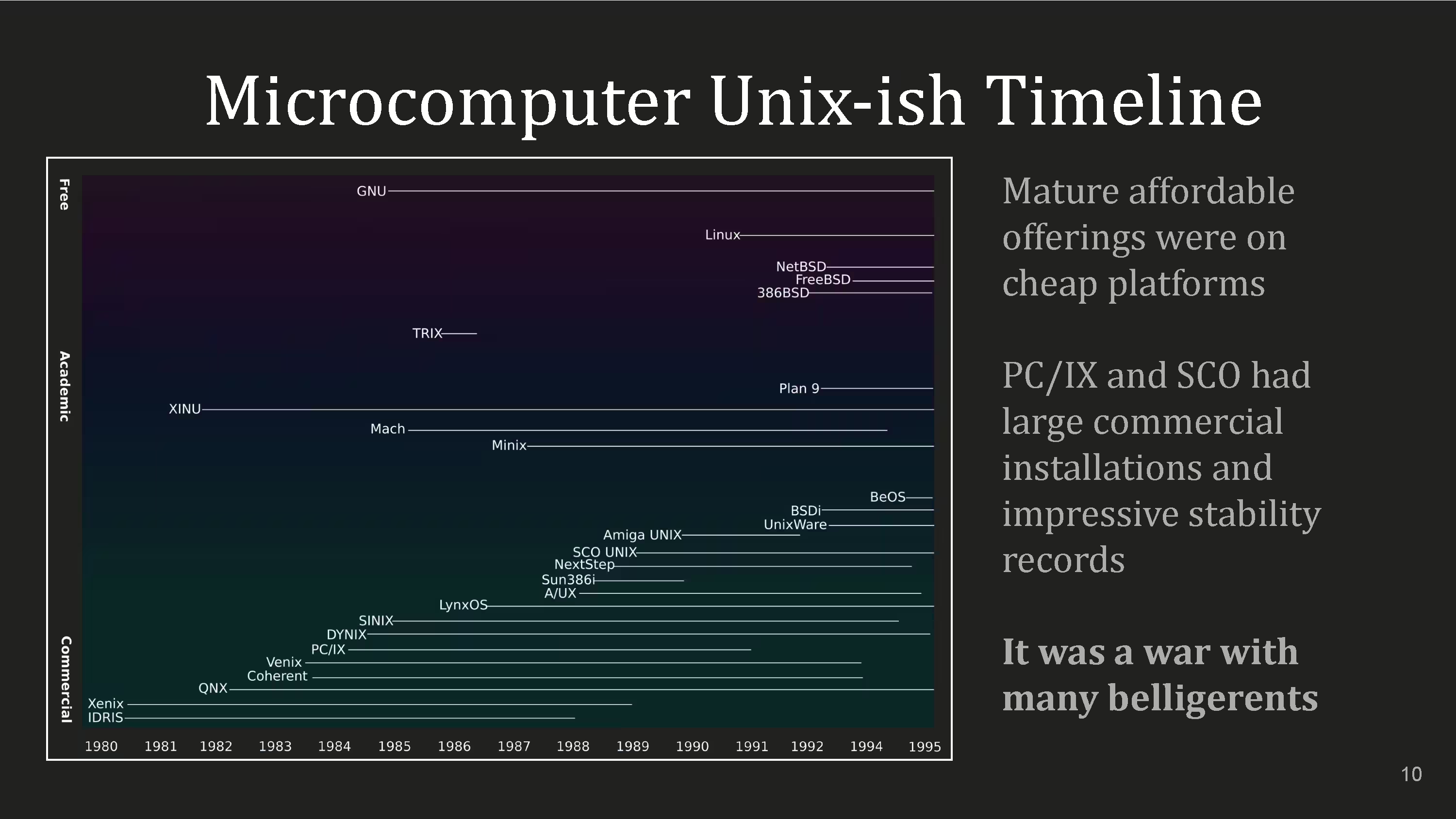

These are only the Unix-ish ones. Oberon, by Niklaus Wirth of Pascal fame, is from 1987, and there were many others that didn’t make the deck.

But these did. Here are various commercial efforts. Some of these are complete systems, others are kernels. Each line spans from the first release to the last.

Linus wasn’t shouting out from the wilderness. There was a vibrant PC UNIX ecosystem that predated him. Products such as Xenix and Coherent were hot stuff at one time but now are the works of Ozymandias.

Look on thee timeline, ye Mighty, and despair!

That’s because this guy Genghis Khaned the UNIX war.

There's only one commercial UNIX without hardware attached to it still around: Xinuos UnixWare 7 Definitive 2018, whose last release was actually in 2017. That's it, and it's deader than dialup.

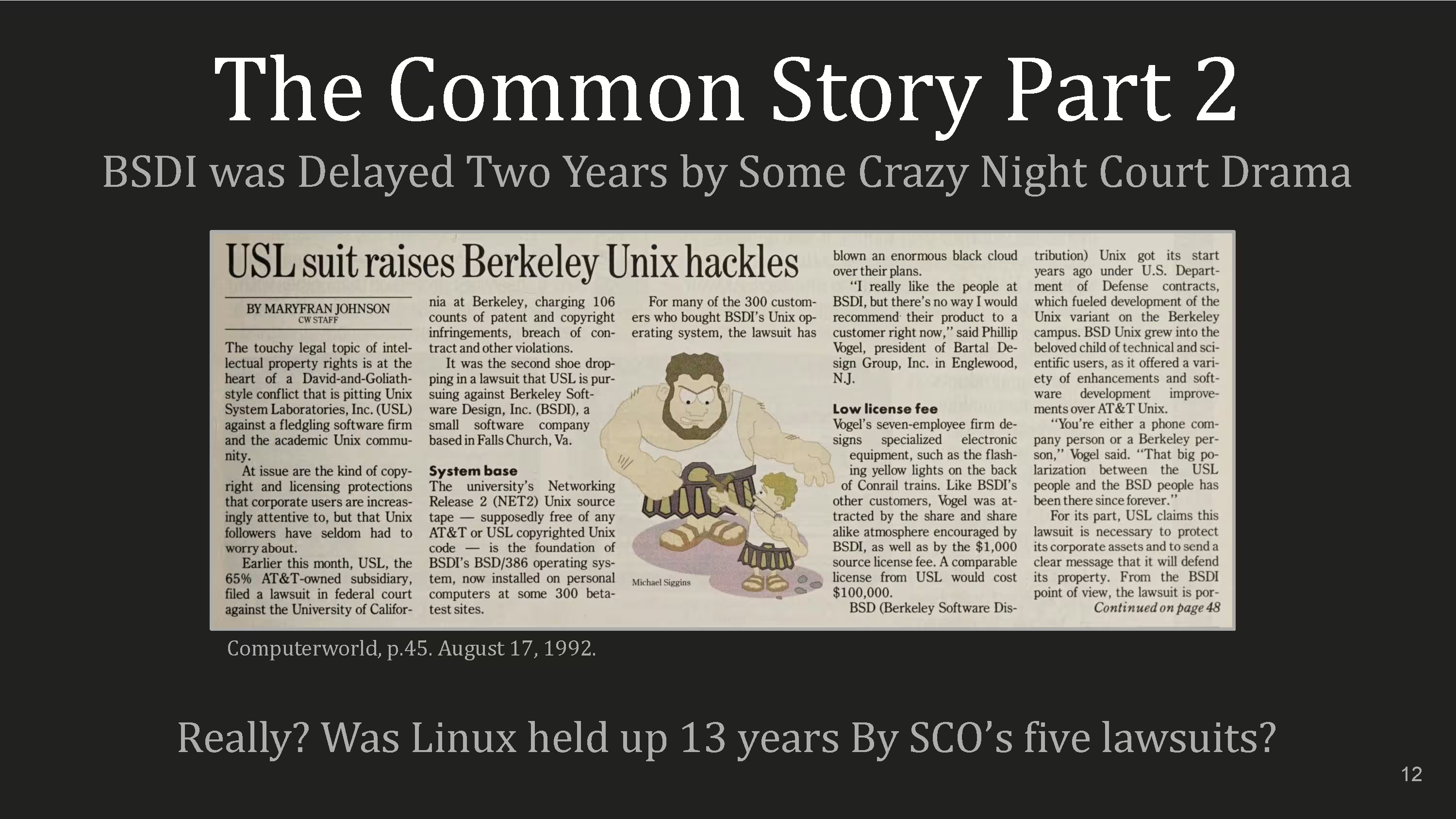

“But Mr. McKenzie there's more to that story! What about the BSD lawsuit?”

Well what about it?

The first BSD was released in 1978, but for our story, we’ll only need two names, a husband and wife team, Bill and Lynne Jolitz.

Why didn’t that win the UNIX war?

The lawsuit is the usual answer, but it's not persuasive. BSD is much older, and in a 1994 interview Bill Jolitz says he started working on 386BSD in 1986, yet only Net/1 and Net/2 were released in the interim.

Also there were five lawsuits that held up Linux in court for over a decade. Did that affect its development?

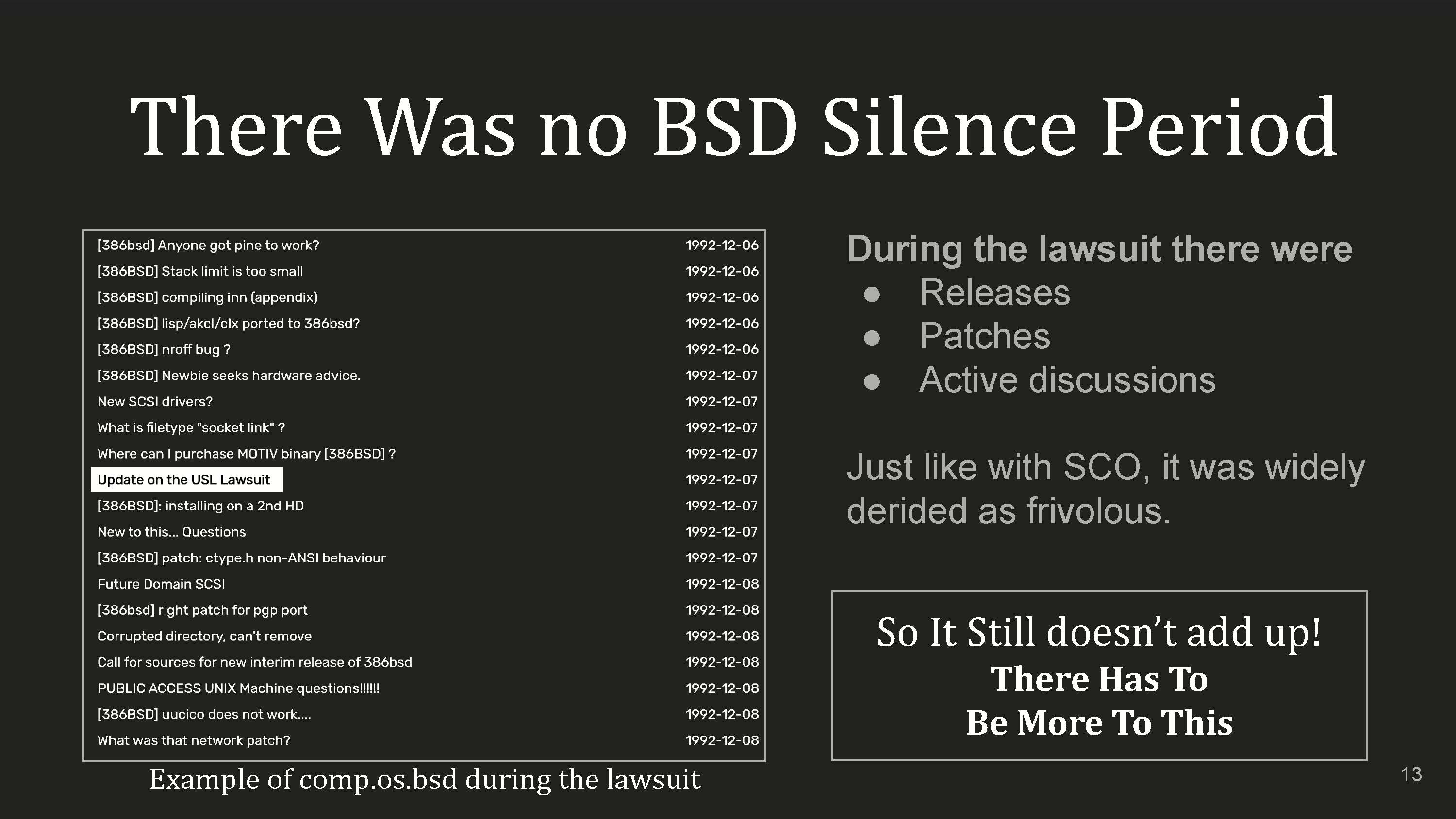

OK, if there really was a two-year hold on BSD, we should be able to go over to, say, USENET and see a fair amount of silence during that time.

But in fact, we don’t. This is just over two days of traffic, and it was lively and vibrant. There’s no evidence of any chilling effect.

The highlighted post is an update on the lawsuit, amidst a bunch of articles talking about progress and bugs.

Mapping the posting velocity of USENET articles in the BSD groups and looking for changes correlated with court activity showed nothing of interest.
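A posting-velocity check of this kind can be sketched as follows. This is a minimal illustration, not the analysis behind the talk: it assumes you already have the dates of posts in the BSD groups as Python `date` objects, and the event date and 28-day window are hypothetical placeholders. A ratio near 1.0 means the posting rate did not change across the event; a chilling effect would show up as a ratio well below 1.0.

```python
from datetime import date, timedelta


def rate_change(post_dates, event, window_days=28):
    """Ratio of posts in the `window_days` after `event` to posts in the
    `window_days` before it. Both windows have the same length, so this is
    also the ratio of posts per day."""
    window = timedelta(days=window_days)
    before = sum(1 for d in post_dates if event - window <= d < event)
    after = sum(1 for d in post_dates if event <= d < event + window)
    if before == 0:
        # No baseline to compare against.
        return float("inf") if after else 1.0
    return after / before


# Hypothetical data: one post per day for 100 days starting 1 June 1992.
posts = [date(1992, 6, 1) + timedelta(days=i) for i in range(100)]
# Hypothetical event date standing in for a court filing.
print(rate_change(posts, date(1992, 7, 15)))  # steady posting -> 1.0
```

In practice you would run this around each docket date and compare the ratios against ordinary week-to-week noise.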

However, there were a number of articles across USENET with titles like “Linux or 386BSD?” comparing the two. Many went into minutiae such as specific hardware, but none mention the lawsuit.

Also, there’s a first-mover advantage theory where people write history from the first successful implementation and forget everything that came before. There were digital currencies before Bitcoin, MP3 players before iPod, and social media before Facebook. Linux’s timing was favorable but there’s more to success than that.

So we have to reject the hypothesis that the lawsuit held BSD back, because there is no contemporaneous evidence for it. Besides, NetBSD went out in April of 1993 and FreeBSD in June, and they already had things like networking. The first installable Linux was MCC-Interim in February 1992. It’s really not that far apart.

Tell me how wrong I am, go to the URL and denounce me at your leisure.

Regardless, Linux has become a few orders of magnitude more prevalent than BSD and killed commercial Unix. How?

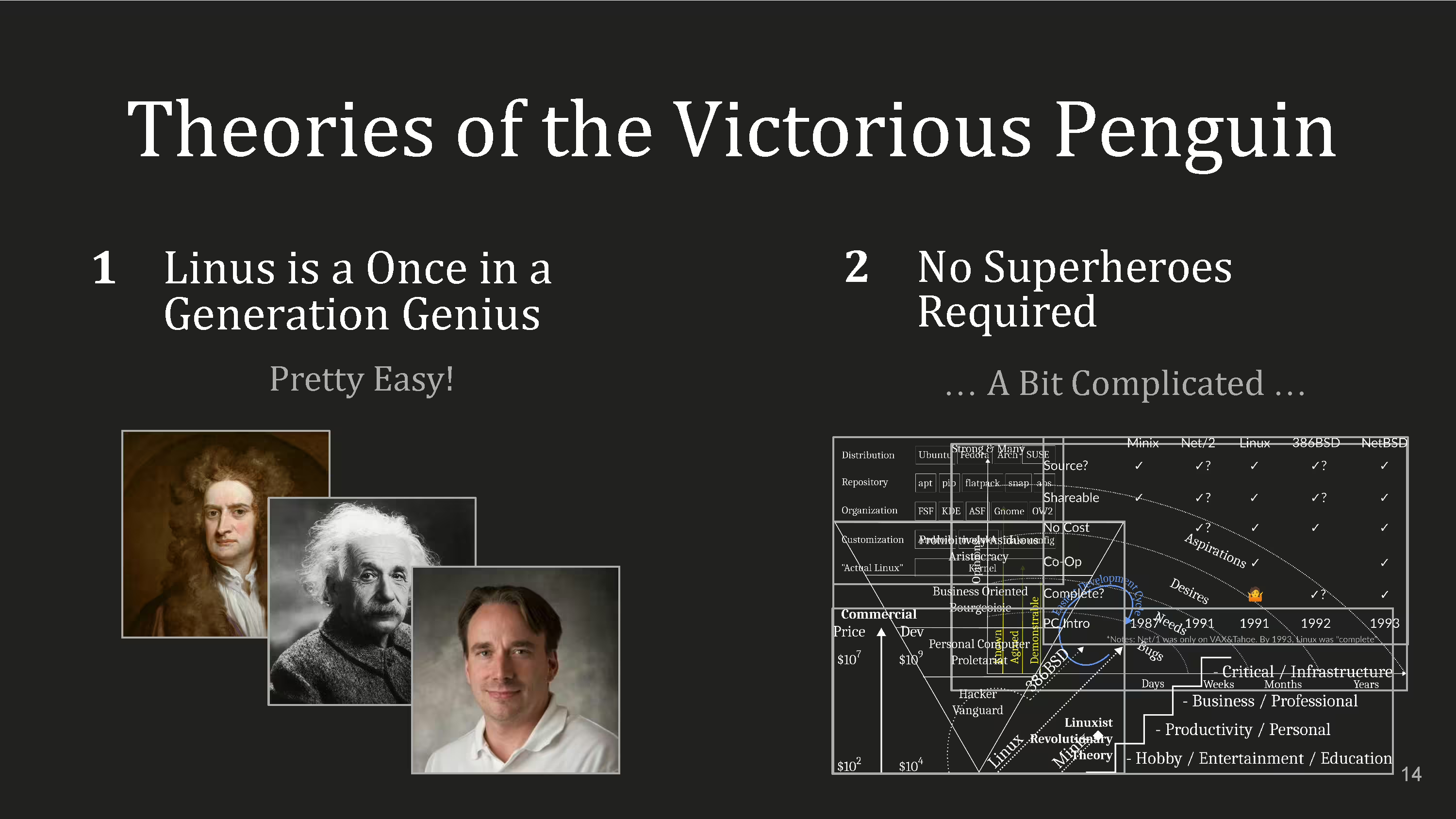

Here are two possibilities: the Great Man theory and a social history. The second is harder!

Let’s try the first, it looks easy! It says some glorious thing happened but you can't do it because you don't have the right genes.

Unfortunately, that isn't useful unless you enjoy sulking about things you consider flops. But you're all rockstars, right?

So what about the second?

Using syllogisms:

Mere mortals did this

We are mere mortals

Therefore, we can do this!

But only if we know how.

The first is a much shorter speech: Carlyle-style hero worship about how great men should rule and others should revere them, a gushing mystical biopic!

However, we’ve got a much harder job.

And to do it we need defensible and falsifiable hypotheses.

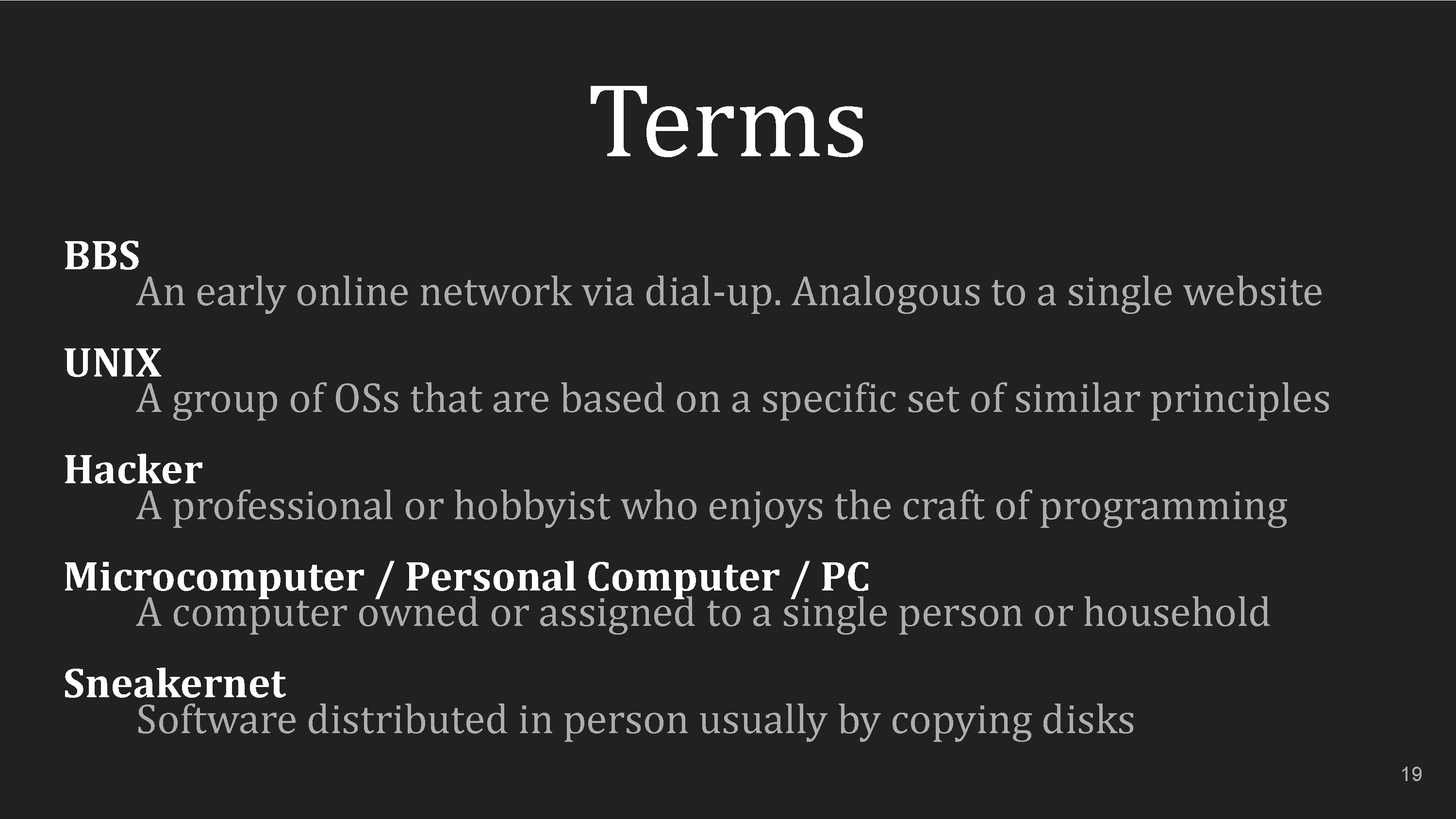

But first here's some more jargon.

Perhaps Linux was the 2nd act of the personal computer revolution: personal software on personal hardware.

If the center will not hold, things fall apart, and mere anarchy is loosed upon the world, we might as well make it productive and get some nice software out of it.

Maybe a free UNIX needed a pool of hackers to build it on cheap computers with a robust communication network and avoid bad actors taking advantage of their generosity.

Maybe. There are also the maybe-nots, that falsifiable thing.

#1: UNIX was thought to be really complicated!

Actually, it was seen as quite elegant and simple. The UNIX source license cost $200,000 in the 80s, so of course it must have been complicated and magical, but that was marketing. Even 386BSD, two decades into UNIX, was done by the Jolitzes, the husband and wife team: just two people.

#2: Community-based programs were thought impossible.

There's an organization called SHARE that came up with the SHARE Operating System in the late '50s, which was community-developed.

SHARE was eventually structured closer to a buyer’s cooperative. To see how it worked around the birth of UNIX, let’s go to Computerworld, 1968:

During the past four months Share has been working with IBM to define changes needed by users. When the company responded, 12 of the 13 requests had been turned down.

Well IBM, look what happens to you when you ignore the users!

Oh yeah, SHARE was Los Angeles based so LA pride.

Speaking of things that happened over 40 years ago.

Although UNIX started in 1969, the first paper came out five years later. Here are four principles extracted from it that will be useful for our journey.

- Leverage Don’t Invent

- Doing Beats Planning

- Needs Not Desires

- Use Early Use Often

Although each one may have its flaws, together they form a cohesion, even a synergy if you will!

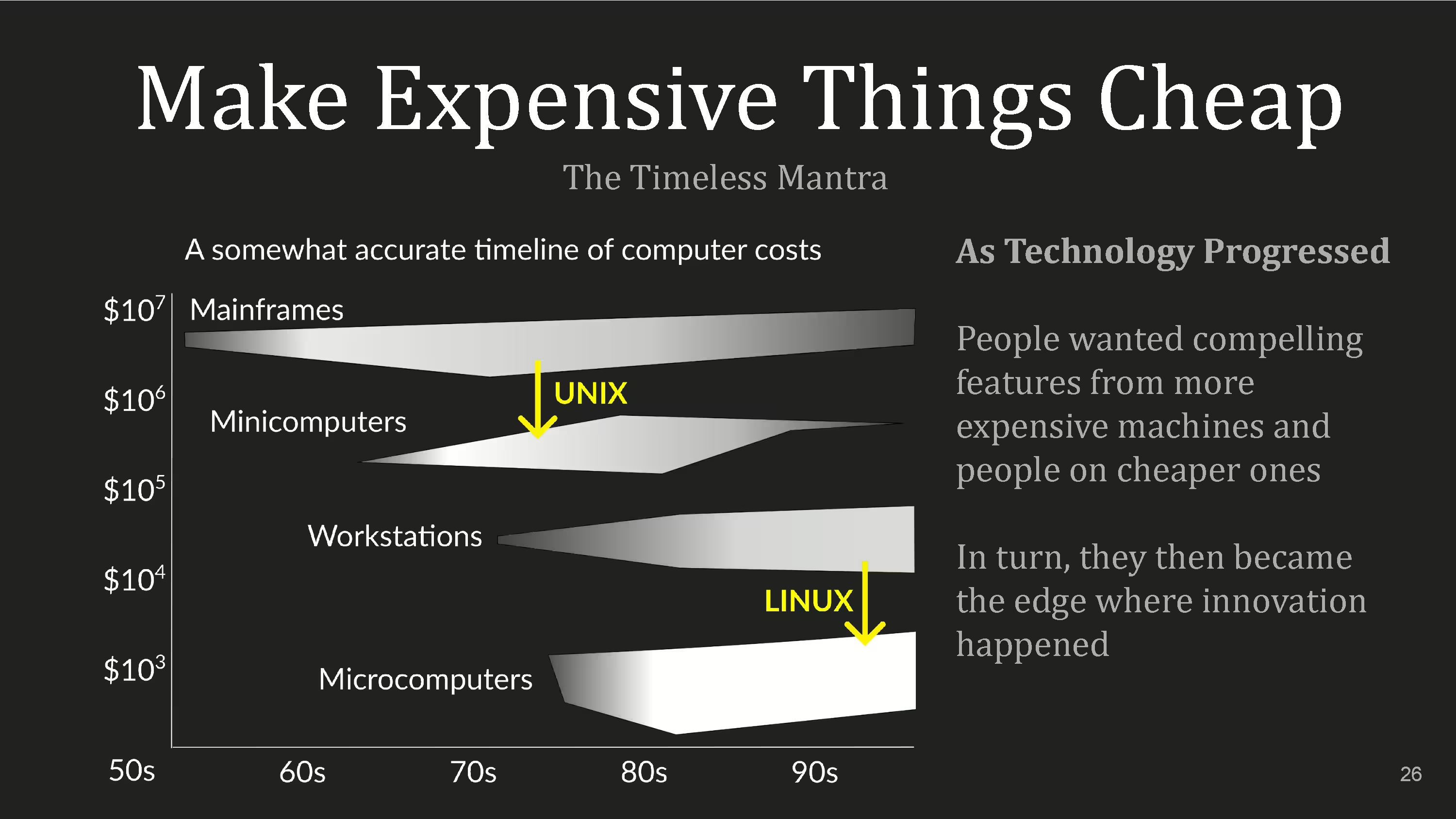

UNIX was designed for a dead class of computers called minicomputers. They were about an order of magnitude, or two, cheaper than mainframes and also less capable, well, at first…

People wanted to solve their tasks on cheaper machines because that's all they had access to.

Roughly every decade a new, lower priced computer class forms based on a new programming platform, network, and interface resulting in new usage and a new industry. That's Bell’s Law and it's from 1972.

As minicomputers came on the market and became more powerful, UNIX was there to leverage them to service people's needs.

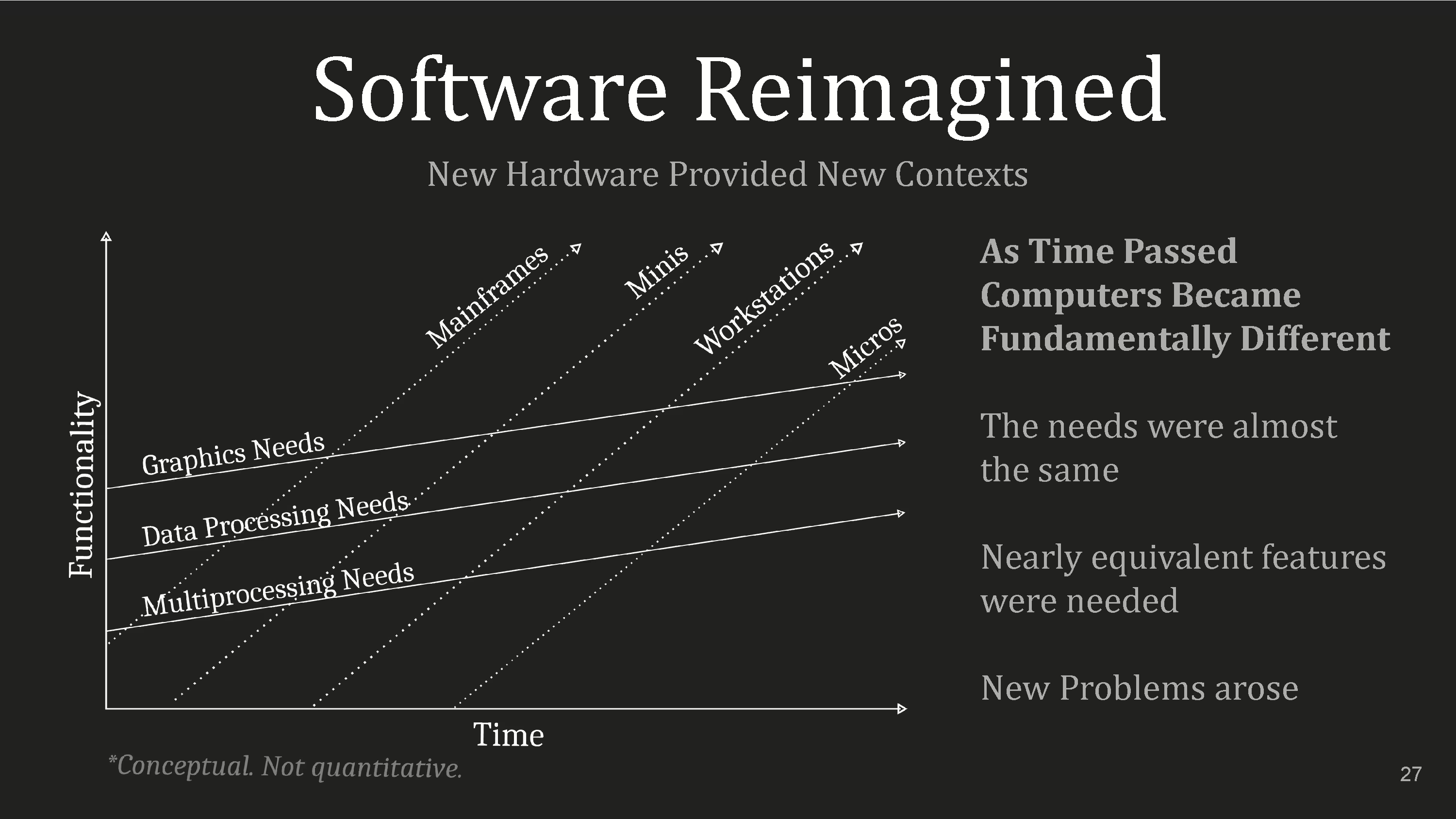

These new machines provided new contexts for new software.

These needs are only meant as examples. The point is the velocity of computer progress went faster than people’s needs.

Computer performance was said to increase as the square of the cost, and transistors per chip doubled roughly every 18 months. Those are Grosch’s Law and Moore’s Law. Together, they meant the industry eventually ate itself over a multi-decade digestion.
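Both laws are easy to state as formulas. The sketch below is illustrative only: the proportionality constant `k` is arbitrary, and the doubling period is a parameter that defaults to the 18 months quoted above.

```python
def grosch_performance(cost, k=1.0):
    """Grosch's Law: performance grows as the square of cost (k is arbitrary)."""
    return k * cost ** 2


def moore_factor(months, doubling_months=18):
    """Moore's Law: growth in transistors per chip after `months`,
    assuming one doubling every `doubling_months`."""
    return 2 ** (months / doubling_months)


# Doubling the budget buys four times the performance under Grosch's Law.
print(grosch_performance(2) / grosch_performance(1))  # 4.0
# A decade of 18-month doublings is roughly a hundredfold increase.
print(round(moore_factor(120)))  # 102
```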

However when new things come in, the people who get there early don’t get there easy.

It’s ok though. Some of us are crazy and prefer it.

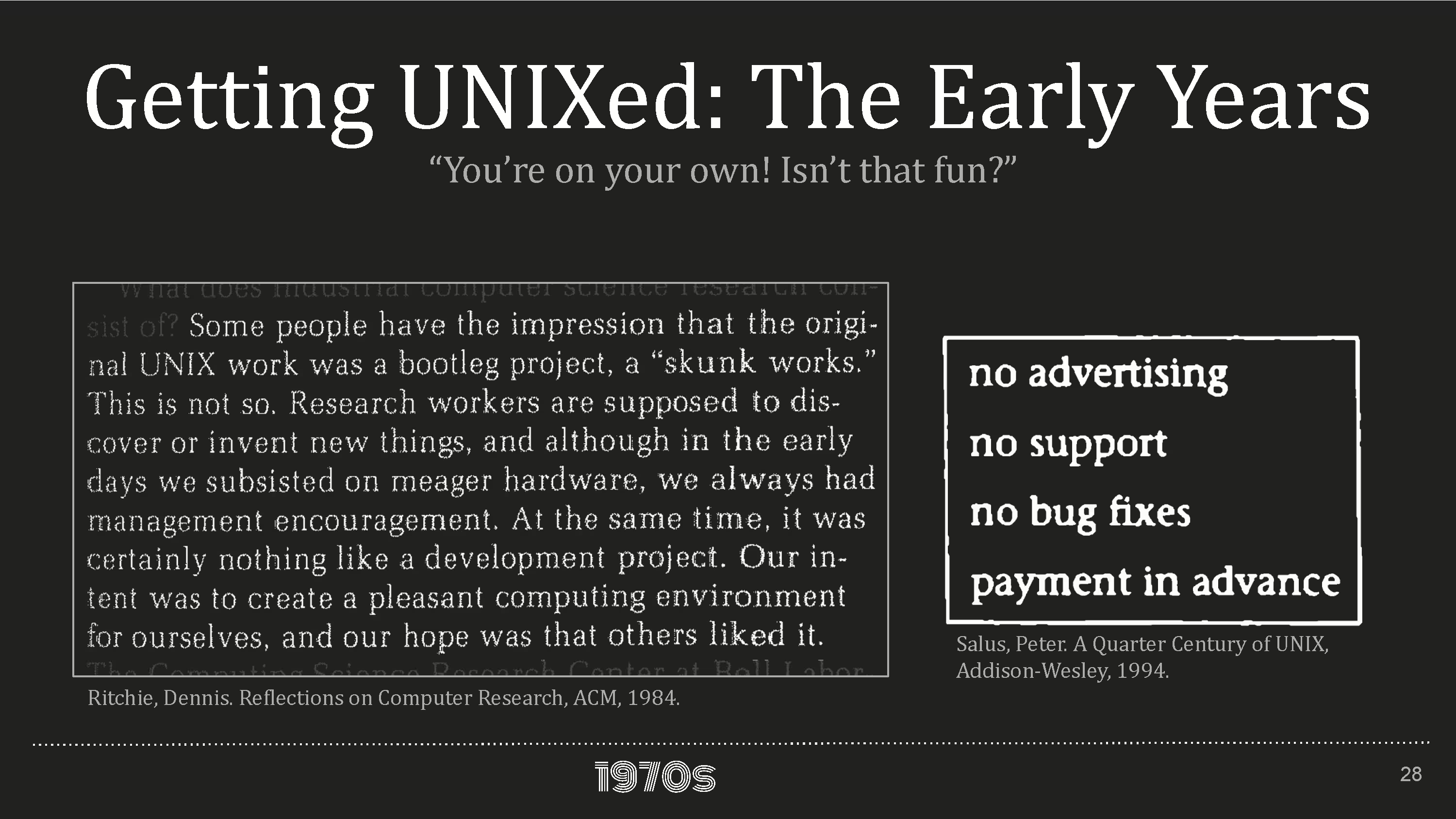

AT&T was under many legal restrictions and because of that, UNIX was initially distributed as if it came out the back of an unmarked white van.

That mantra was part of the presentation deck for early UNIX, and it amounts to saying you're on your own!

Think of the adventure, the excitement!

But, who are you catering to with such a message?

Where will their behavior guide things?

It's not about welcoming everybody doing anything. With any of these efforts you have to ask yourself who you're bringing to the door and what kind of behavior you're expecting. That’s what separates a library from a soccer field.

For example, Linus, September 1992:

Minix is good for education. Coherent for simpler home use. Linux for hacking and 386bsd for people who want "the real McCoy".

Or a year earlier:

You also need to be something of a hacker to set it up, so for those hoping for an alternative to minix-386, please ignore me. It is currently meant for hackers interested in operating systems and 386's with access to Minix.

Maintaining focus is about intentionally (not incidentally, but actually intentionally) choosing what to ignore.

You have to be a clear signal in our noisy world.

Because you had to know what you were doing, UNIX was initially introduced through engineering and technical people and not through the conventional decision makers.

But it worked and by the 80s UNIX was mainstream.

As both the consumers and producers of UNIX multiplied, the suppliers tried to differentiate themselves and succumbed to the usual trappings of competitive markets by enclosing the commons: taking ownership of groups and commonly held things, setting up barriers to lock customers in, and growing their base by trying to be special. That led to fragmentation.

UNIX had infected everything from workstations to mainframes. Unisys, one of the mainframe makers, had an article in a September 1987 issue of Computerworld predicting a tripling in UNIX sales that year.

The problem with the infighting goes back to the chart with the various blobs for computer classes. The threat almost always comes from below. In this case, it was Microsoft and microcomputers, not Nixdorf Sinix on an NS32000 versus Clix on an Intergraph InterPro.

In 1985, Microsoft argued that their Xenix UNIX, which had an installed base of 100,000, would be a multi-user version of DOS. In '87 they said they didn’t see it competing with their plans for OS/2, which at that time was seen as the successor to Windows 1.0. LOL.

Things changed, specifically that December with the introduction of Windows 2.0 and later with Windows for Workgroups and NT. That was the real threat.

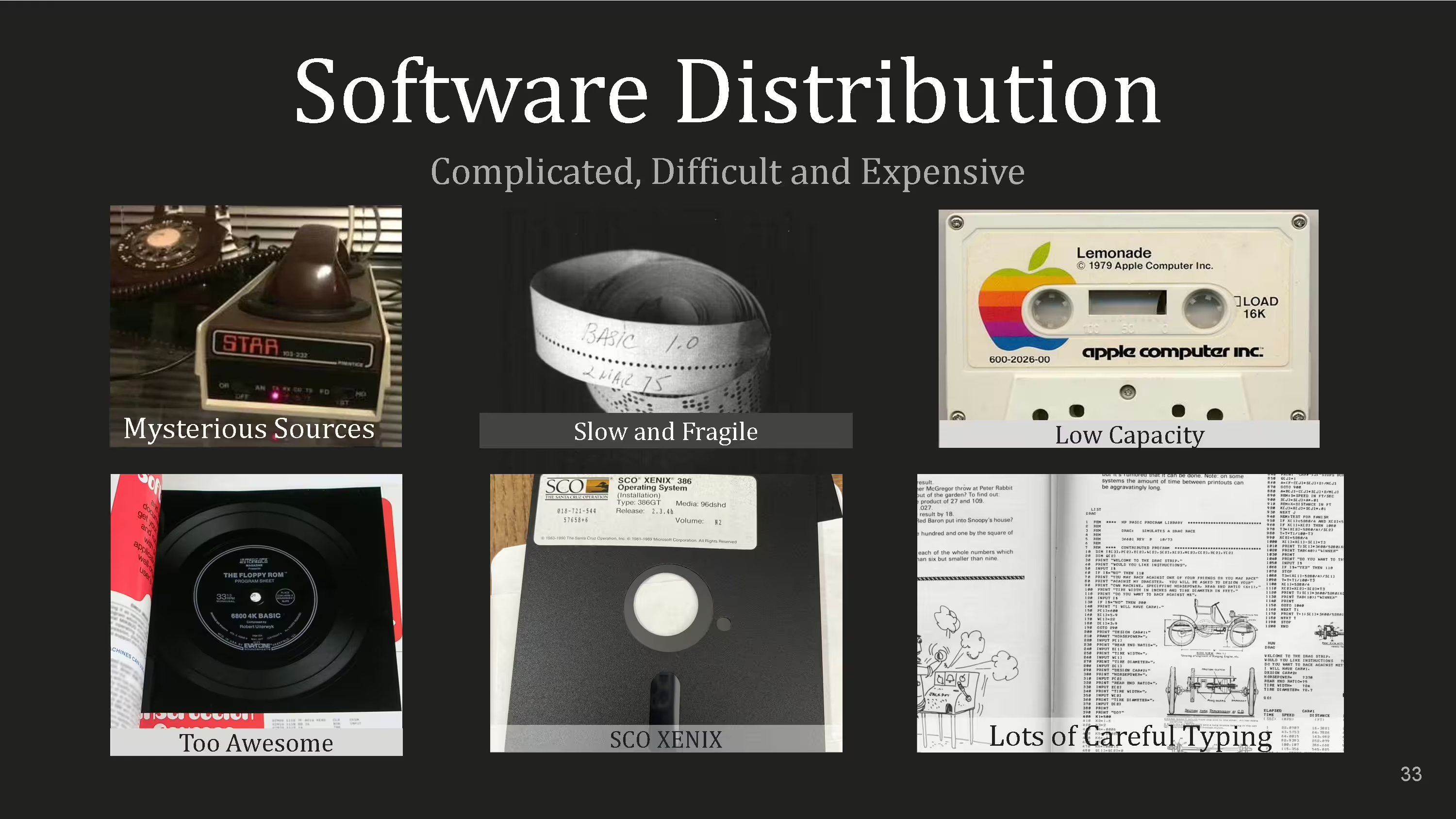

Anyways, Xenix required twelve 360K floppies, which was seen as bonkers.

A 32GB microSD card holds about 93,000 of those floppies. Stacked flat on top of each other they'd be about 190 meters tall; laid out on the ground, that’s like a 2.5-minute walk. All that storage is now the size of your thumbnail and costs as much as a taco on the west side.
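The floppy arithmetic works out as follows under stated assumptions: the card is counted as 32 GiB, a 360K floppy as 360 KiB, each disk is assumed to be about 2 mm thick (so the 190 meters is the height of a flat stack; laid edge to edge by their 5.25-inch width they would stretch over 12 km), and walking pace is assumed to be 1.3 m/s.

```python
CARD_BYTES = 32 * 2**30      # 32 GiB microSD card
FLOPPY_BYTES = 360 * 2**10   # 360 KiB 5.25" floppy
FLOPPY_THICKNESS_M = 0.002   # assumption: about 2 mm per disk in its sleeve
WALK_SPEED_M_S = 1.3         # assumption: typical walking pace


def floppy_comparison():
    """Return (floppies per card, stack height in meters, walking minutes)."""
    floppies = CARD_BYTES / FLOPPY_BYTES
    stack_m = floppies * FLOPPY_THICKNESS_M
    walk_min = stack_m / WALK_SPEED_M_S / 60
    return floppies, stack_m, walk_min


floppies, stack_m, walk_min = floppy_comparison()
print(f"{floppies:,.0f} floppies, {stack_m:.0f} m tall, {walk_min:.1f} min walk")
# 93,207 floppies, 186 m tall, 2.4 min walk
```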

Anyways, software distribution used to suck.

Speaking of Microsoft, that punched tape is how they distributed Altair BASIC in the 1970s. It took about 20 minutes to load and it wasn’t stored on disk because the Altair didn’t have one. Just keep the computer on if you want to use the software.

The lower right is called a “type-in” program. Software was distributed like this as multiple pages of hexadecimal.

No, really. This is MLX format. They published listings like this every month. For years. You typed it in. There was no electronic reader. There's some BASIC code on the first page and it’s not easy either.

The QR code links to this game running in an emulator in your browser. You can scan it and just tap on your phone. No typing required.

Anyone want to scan it and give it a try?

You could only use the software you had access to. There were also software user groups which were convenient for the 1 in 20 people who lived near one.

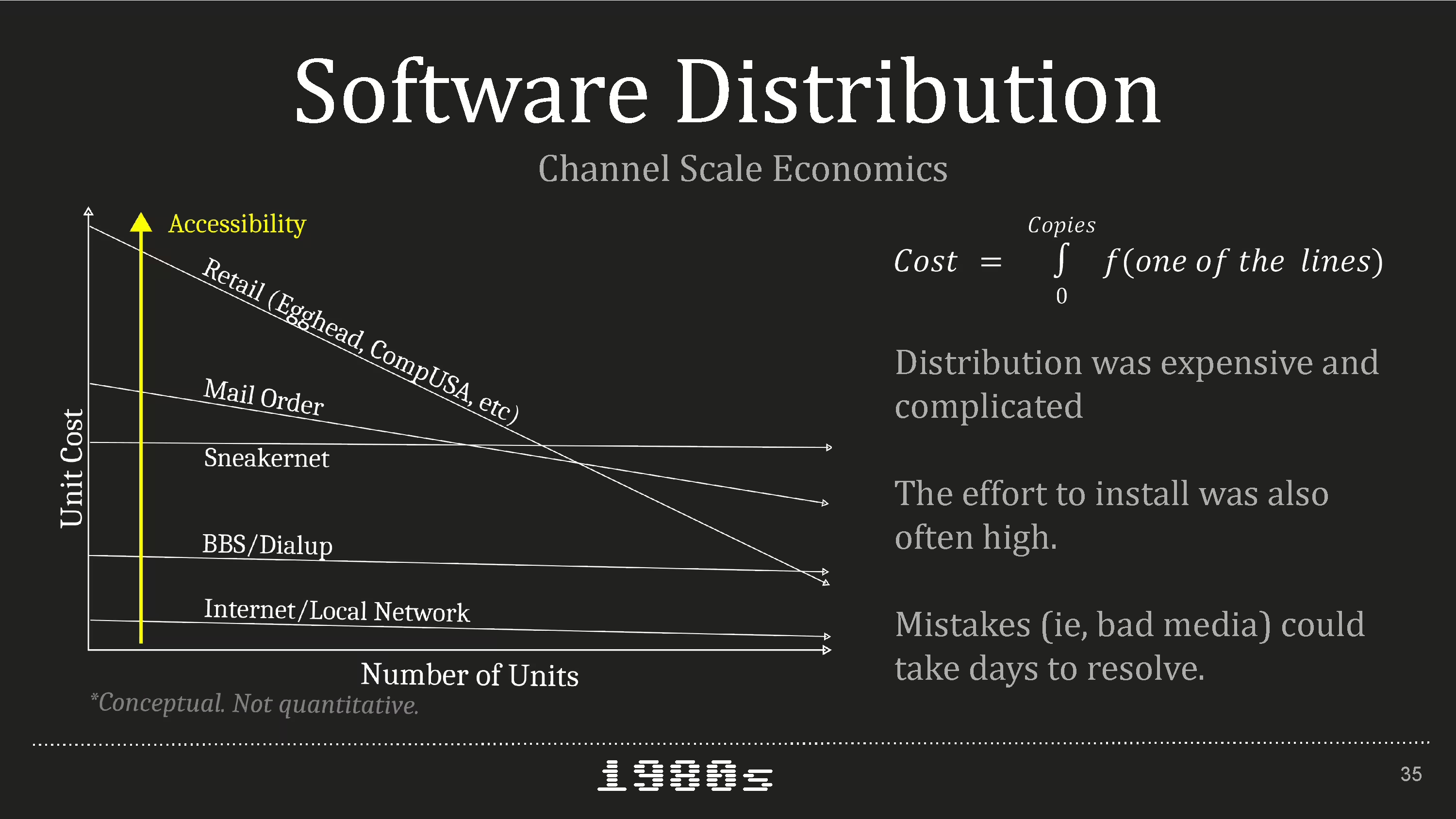

But for most, software meant retail, mail order, or copying a floppy from a friend. The bottom two methods had consumer overhead: for dialup you usually had to pay an expensive phone bill, and as for the Internet, you probably didn’t have it.

2400bps access to GEnie in 1989, for instance, was $42 an hour, and an hour is roughly how long it takes to get a megabyte over 2400bps.

This means most people were only exposed to the top three groups and it was difficult for smaller developers and non-commercial ones to get their software to the masses.
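The GEnie numbers can be checked with a little arithmetic. This is a minimal sketch, assuming a megabyte means 2^20 bytes and no compression or protocol overhead beyond serial framing: `bits_per_byte=8` counts only payload bits, while 10 models the start and stop bits of a typical 8N1 modem link. The $42 hourly rate is the figure quoted above.

```python
MEGABYTE = 2**20  # bytes


def transfer_minutes(num_bytes, bps, bits_per_byte=8):
    """Minutes needed to move num_bytes over a link running at bps."""
    return num_bytes * bits_per_byte / bps / 60


def transfer_cost(num_bytes, bps, dollars_per_hour, bits_per_byte=8):
    """Connect-time charge for the transfer at an hourly rate."""
    return transfer_minutes(num_bytes, bps, bits_per_byte) / 60 * dollars_per_hour


print(f"{transfer_minutes(MEGABYTE, 2400):.0f} min")                    # payload only
print(f"{transfer_minutes(MEGABYTE, 2400, bits_per_byte=10):.0f} min")  # with 8N1 framing
print(f"${transfer_cost(MEGABYTE, 2400, 42):.2f}")
```

Either way it lands at roughly an hour, and roughly $40 to $50 of connect time, for a single megabyte.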

Paraphrasing Marketing High Technology, Bill Davidow, 1986:

It’s not a product without a distribution channel or in Linux’s case, a distribution. The technology may have the potential to address the needs of many but without specialists, read distributors, to put it in the hands of those customers, it will not succeed. Products are sold through distribution, not to distribution.

Let's jump forward to the “Why Linux” question here for a bit while this diagram is up. Linux had high accessibility of the install media. In March 1993, UnixWorld listed FTP repos, BBS numbers and companies you could mail-order Linux from. Later you could find Linux at coffee shops, libraries, and attached to magazines at the grocery store.

Filling up a CD with a Linux distro and cribbing some HOWTOs was cheap content for a magazine that just wanted to get an issue out. But let’s go back to the problems.

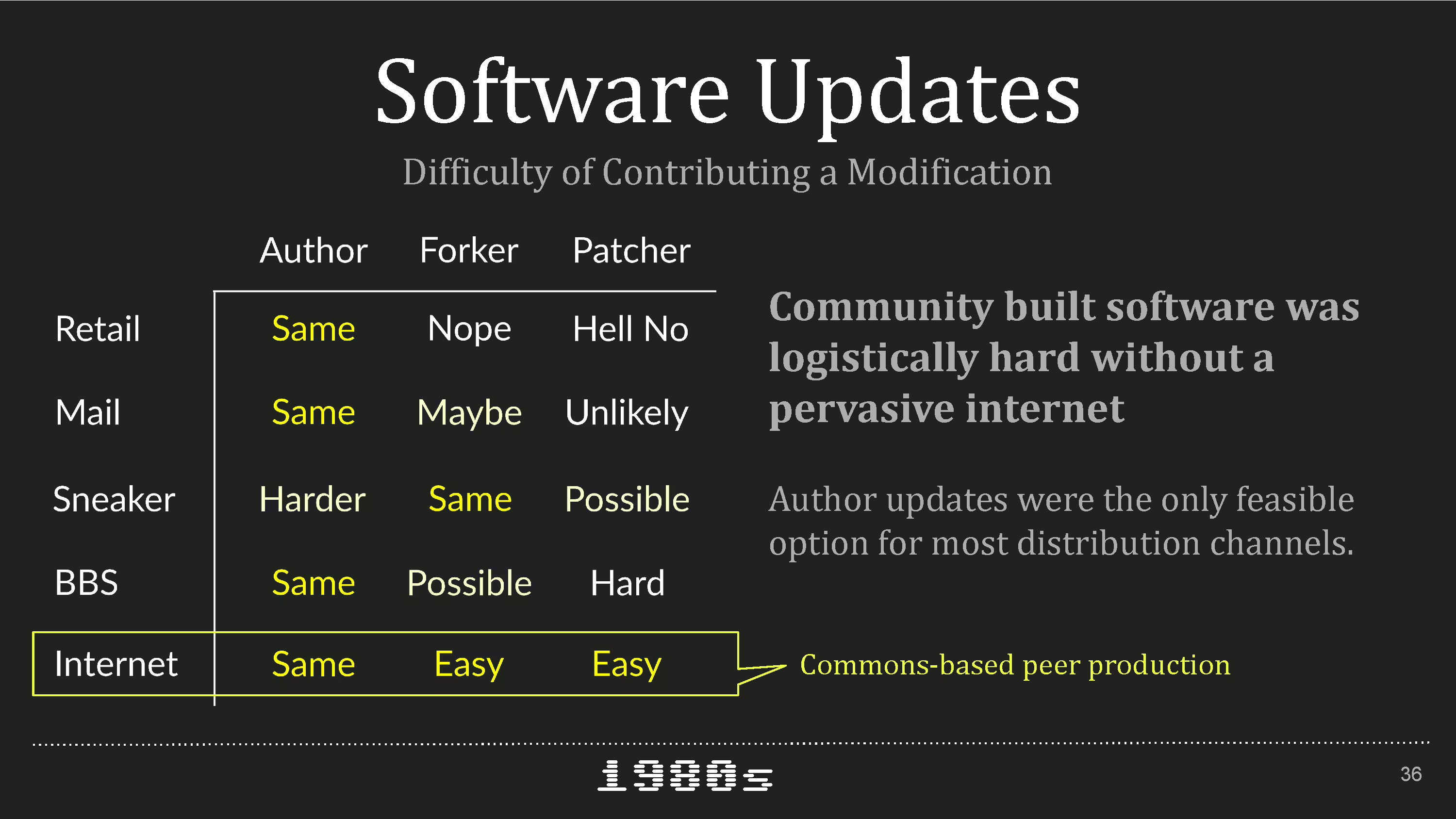

Depending on the distribution channel, updates had different logistical complexity, especially when considering updates from outsiders.

For instance, if you wanted to update the retail Lotus 1-2-3 and have your changes appear on the shelf at Circuit City, you'd probably have to do it yourself and get arrested.

It would have been fun though!

Once there were online networks with the storage and communication necessary to unlock multiplayer online mode for the software development game, a new way to write software was created.

Yochai Benkler described this as commons-based peer production in his book The Wealth of Networks.

Let’s go to Linus, 1991:

I've enjoyed doing it, and somebody might enjoy looking at it and even modifying it for their own needs. It is still small enough to understand and I'm looking forward to any comments you might have. I'd like to hear from you, so I can add your software to the system.

Adding Minix things to Linux is fine but how do contributions and updates work? Unless you can answer these questions clearly, progress will be slow, infrequent and inefficient.

Everyone has to agree on the terms and feel welcome otherwise they become petty and don't come around here no more.

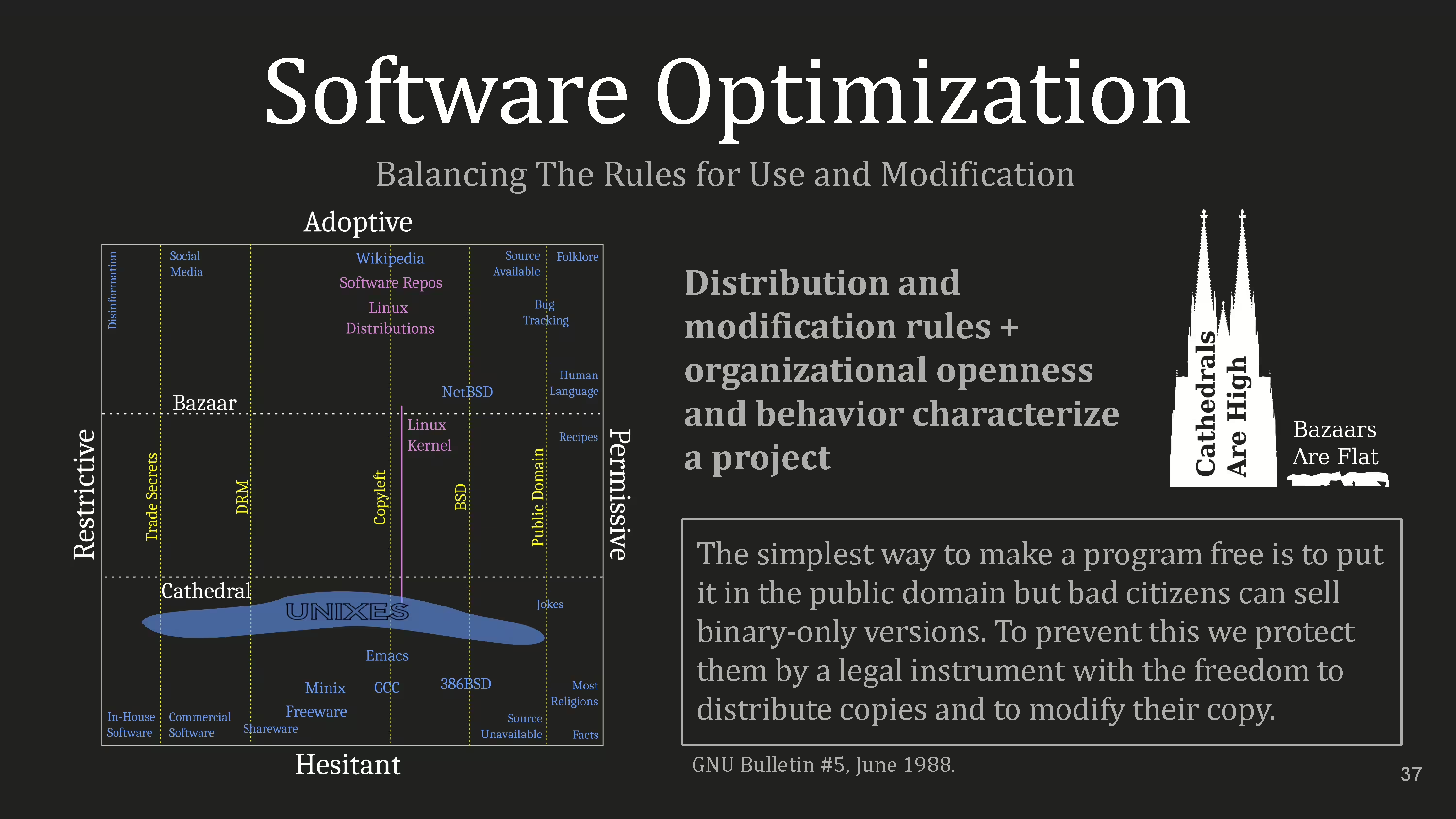

So GNU made a Rooseveltian list of four software freedoms to define “free software,” but it’s not just a license, it’s organization plus license. For our purposes we’re going to talk about “horizontalizing” software development, what’s labeled adoptive on the chart.

Essentially how easy it is for

- Modifications to become integrated and in the same distribution channels

- Other people to become a distributor or to share your product

You need a legal structure and organizational structure that affords this: an “open shop” that permits anyone to come in. Eric Raymond spoke to this in The Cathedral and the Bazaar.

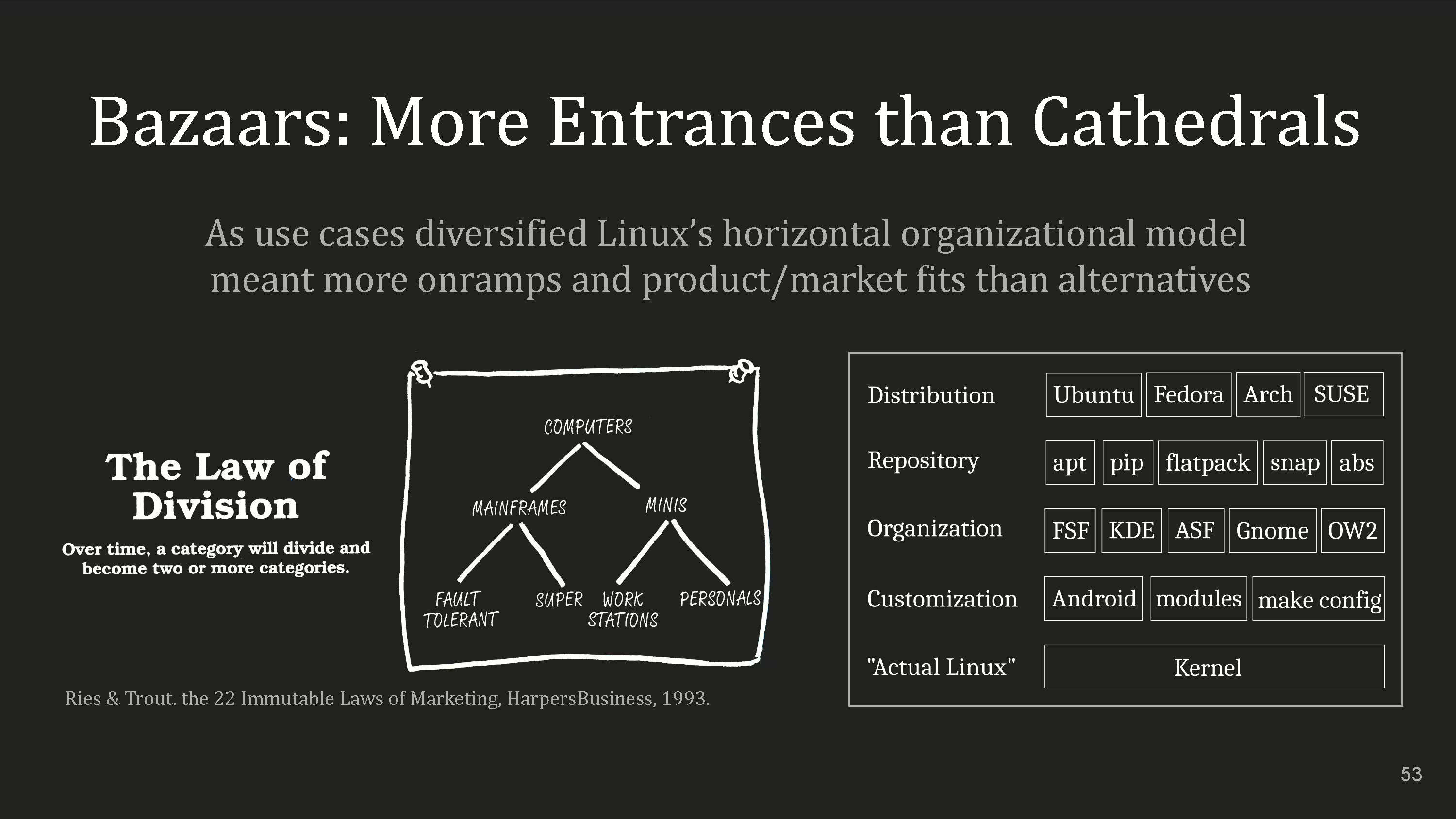

In the Cathedral model, which is tall, source code is available but development is restricted to an exclusive group. Contrast this with the Bazaar model, which is flat, in which the code is developed over the Internet in view of the public.

The vertical lines here, from permissive to restrictive, represent a general continuum. Public Domain is seen as too permissive in the eyes of GNU because you can re-license and re-privatize things. I’ve also read that copyleft and the GPL are different from each other, but I never went to law school.

If you zoom in you’ll see non-computer things. Let's go over a few.

Bottom right is “facts”. People ought to be really hesitant to modify facts and they're public domain.

Above that you have most religions. Anyone can preach the good word and start a congregation but at least in principle, the texts are sacred.

As we go up we get jokes, recipes, human language and at the top is folklore, which changes readily. As you move to the left of Public Domain, you get Wikipedia, which anyone can edit. On the restrictive adoptive side is social media. Anybody can contribute but the platforms retain a bunch of rights. Commercial software is down in the hesitant and restrictive camp.

The pink stuff is all Linux related.

Minix is down next to Freeware and GCC in the hesitant category, below the pool labeled UNIXES. Except for Social Media and Wikipedia, all of this reflects the early 1990s, not 2024.

Speaking of the past, let’s go to the introduction of Minix.

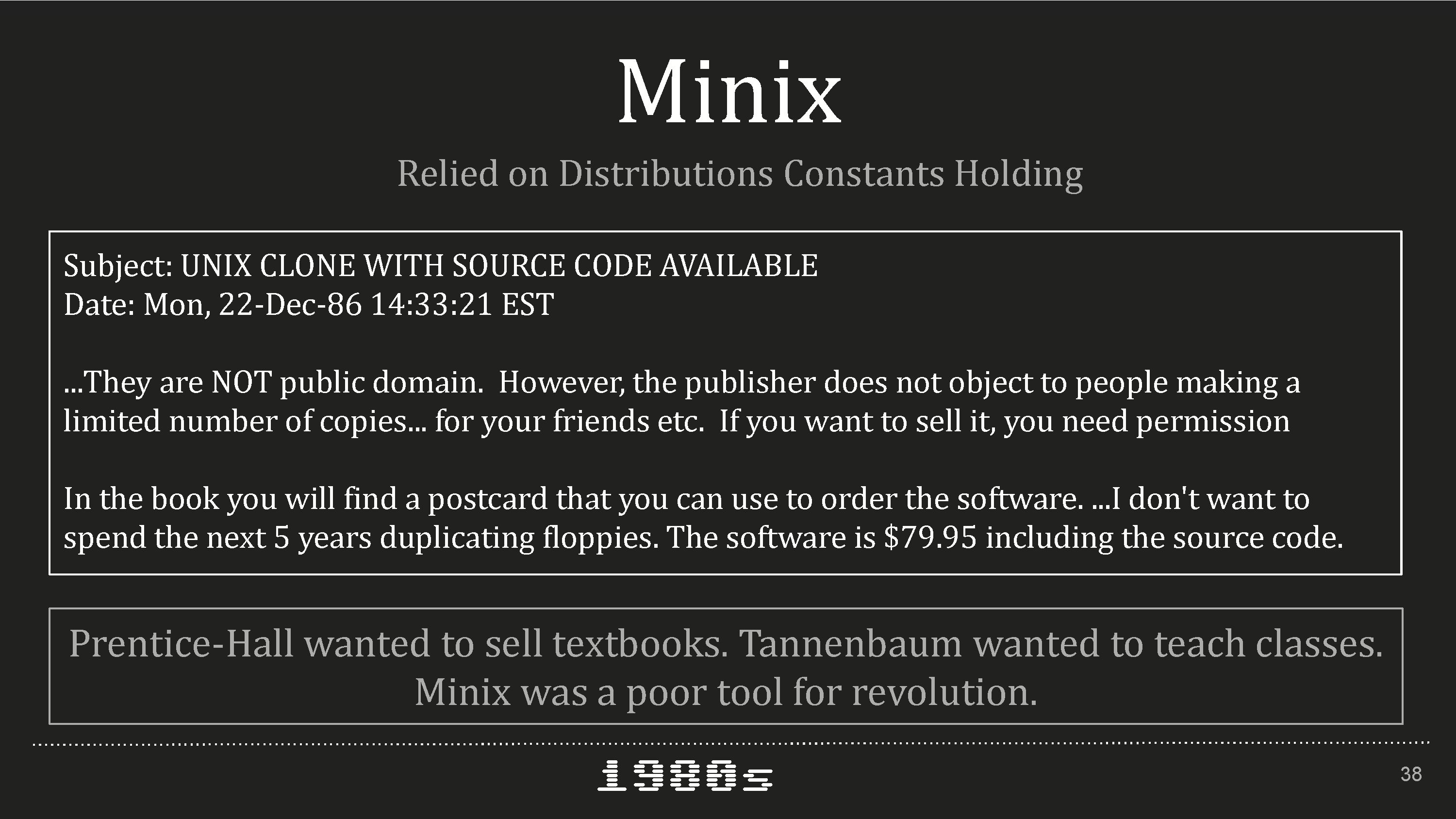

In 1986, commons-based peer production wasn’t feasible; people didn't have the internet in their pocket. Because of the prohibitive logistics of software distribution and updates, especially customer-initiated ones, Minix could only be a cathedral. Also, it was a product of Prentice-Hall, who wanted to sell textbooks, and it served as instructional material for Andy Tanenbaum's textbook.

So here are the relevant parts from the announcement. It’s not public domain. However, the publisher does not object to people making copies for friends; this is sneakernet. If you want to sell it, which covers the two categories above sneakernet, retail and mail order, you need permission.

In his book you will find a postcard that you can use to order the software … there’s our mail order again and he says he doesn’t want to copy a bunch of floppies, as in, do sneakernet.

Now Minix was adjacent to GCC, what’s up with that?

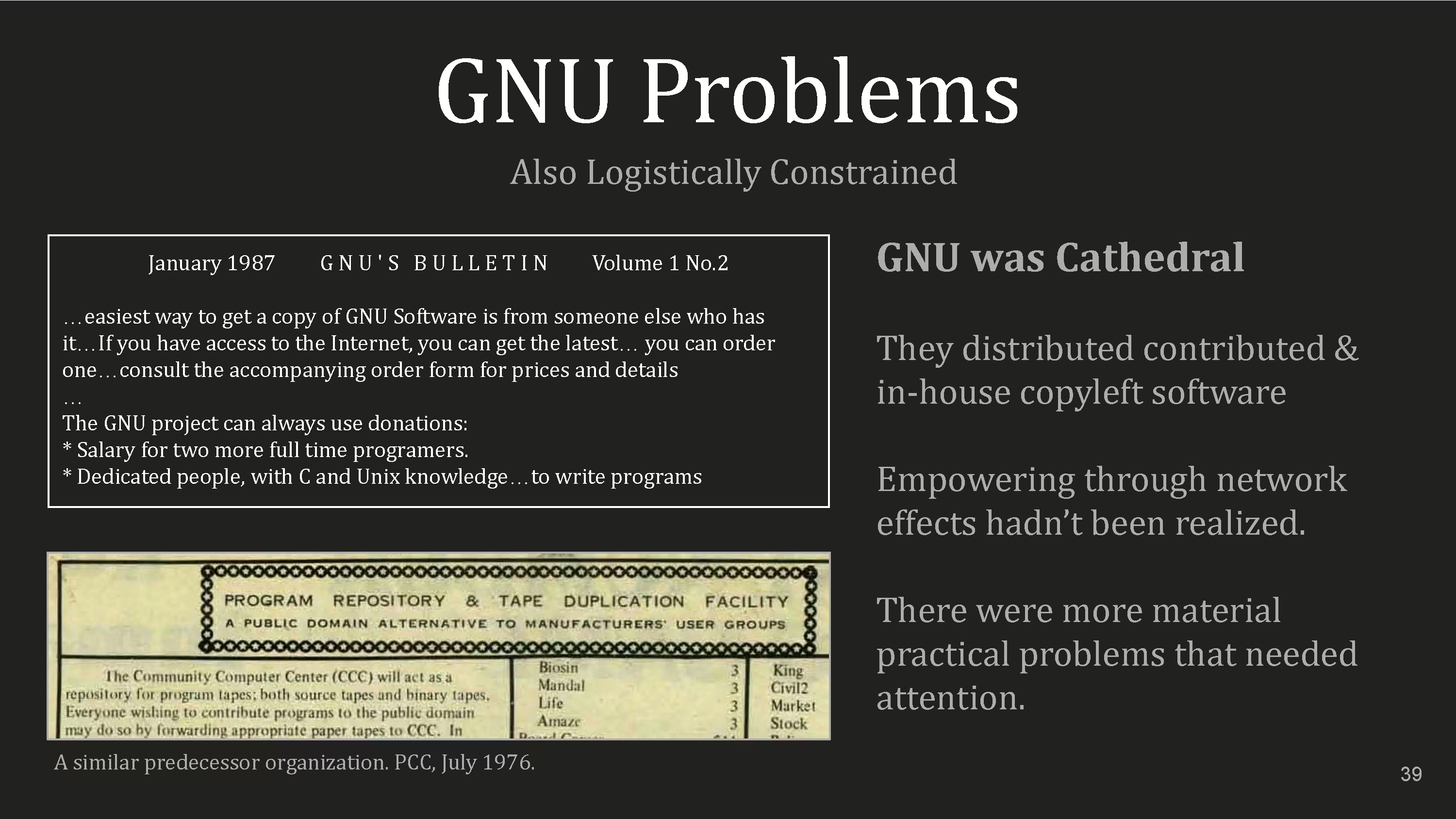

GNU was also a cathedral. Here's an example from an early GNU Bulletin, 1987. We can see it listing out different distribution channels, as they solicited funds to pay programmers to write more software, like a software engineering firm.

They played a second role as a provider of copyleft and eventually software under the GPL which came out a few years later. Every bulletin had a growing list of software by others that you could order.

You could get Net/2 BSD and then 4.4BSD-Lite from them until 1995, when Linux came from below and GNU got disrupted like everybody else. Then GNU started backing a Linux distribution called Debian, which ended up breaking up with them.

As far as distributing free stuff goes, there are precedents, such as this one from 1976 trying to be a repository for public domain software from smaller producers, such as code published in, say, Dr. Dobb's Journal.

Some assembly programs in that publication ran over 40 pages, which you were, of course, expected to type in yourself. So people were looking for a more reasonable solution that didn’t cost much, but not all the pieces were there yet.

All of these things required labor, coordination and resources. How we instrument them determines which things get better faster.

Ideas are bazaar even when their implementations are cathedrals. For instance, there were many graphical desktop computers; even though each one was a proprietary, restrictive project, the superstructure of desktop computers operated in the adoptive space.

Pretend we have a concept of a Free Operating System. We may not get all the parts right the first time but the platonic ideal exists, well kind of. It's as much a contemporaneous invention as anything else existing as a pale true thought suspended in our life stream.

We can go back to Vannevar Bush’s Memex article, “As We May Think”, in the Atlantic from 1945 to see the spirit of what we now call many things.

Unlike products, platonic ideals are collective projects owned by nobody. They’re permissive and adoptive and not static abstractions divorced from human influence. They’re ideas manifested from contexts. The map is not the territory and in our industry we are tasked with creating both through pure thought stuff.

What a responsibility! We better organize!

This is about the complexities of building software and not about electing people.

You may have heard that commercial software began with Bill Gates’ “An Open Letter to Hobbyists” in 1976, where he denounced sneakernet distribution of Altair BASIC because he didn’t get paid for each of the copies.

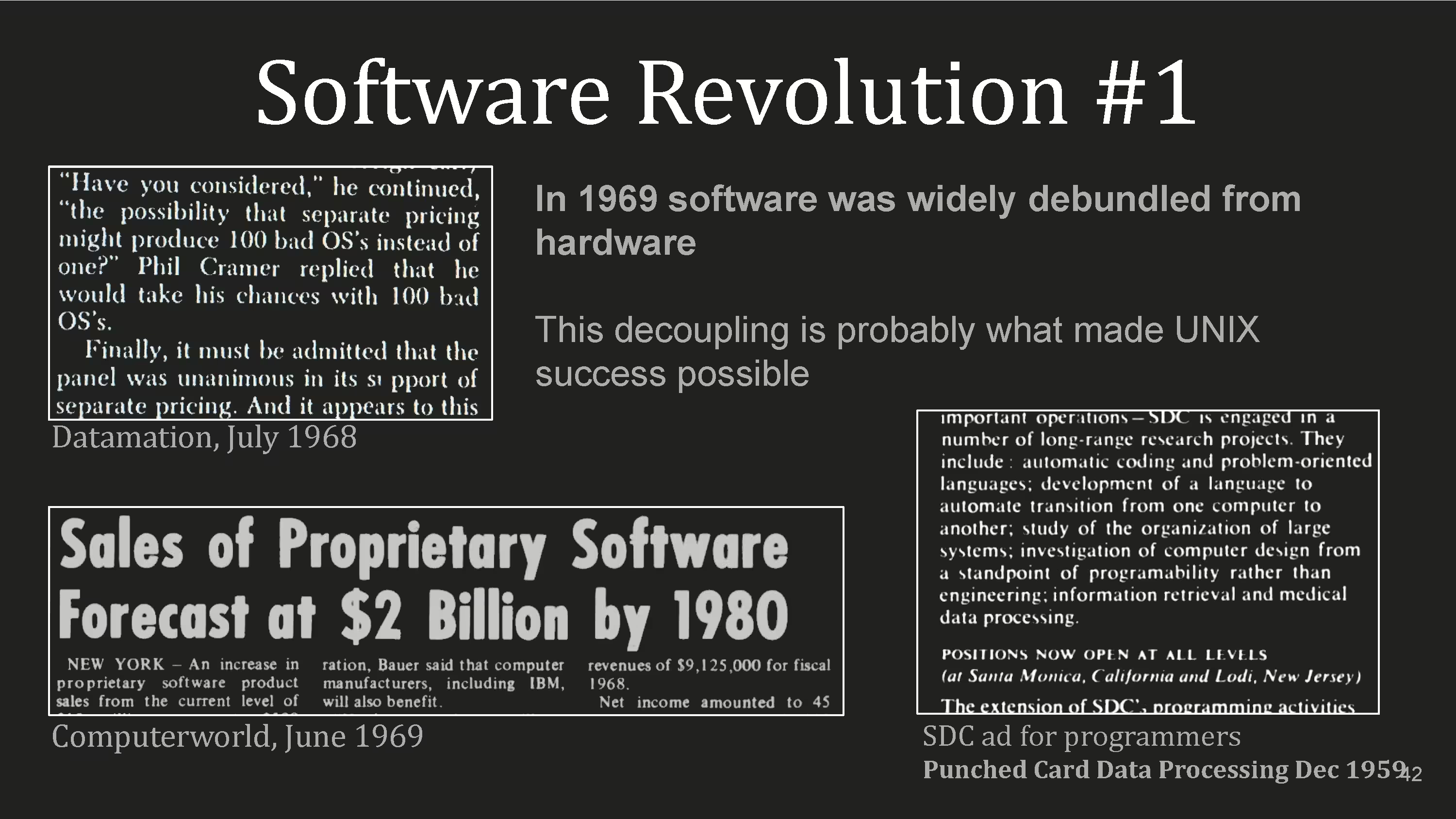

That’s not really when commercial software began. In the mid-1950s, software was in-house, coupled with hardware, or contract based as you can see in this 1959 ad for a programmer from Punched Card Data Processing.

But commercial software in the sense of products you buy for a computer you already own has existed since at least 1960.

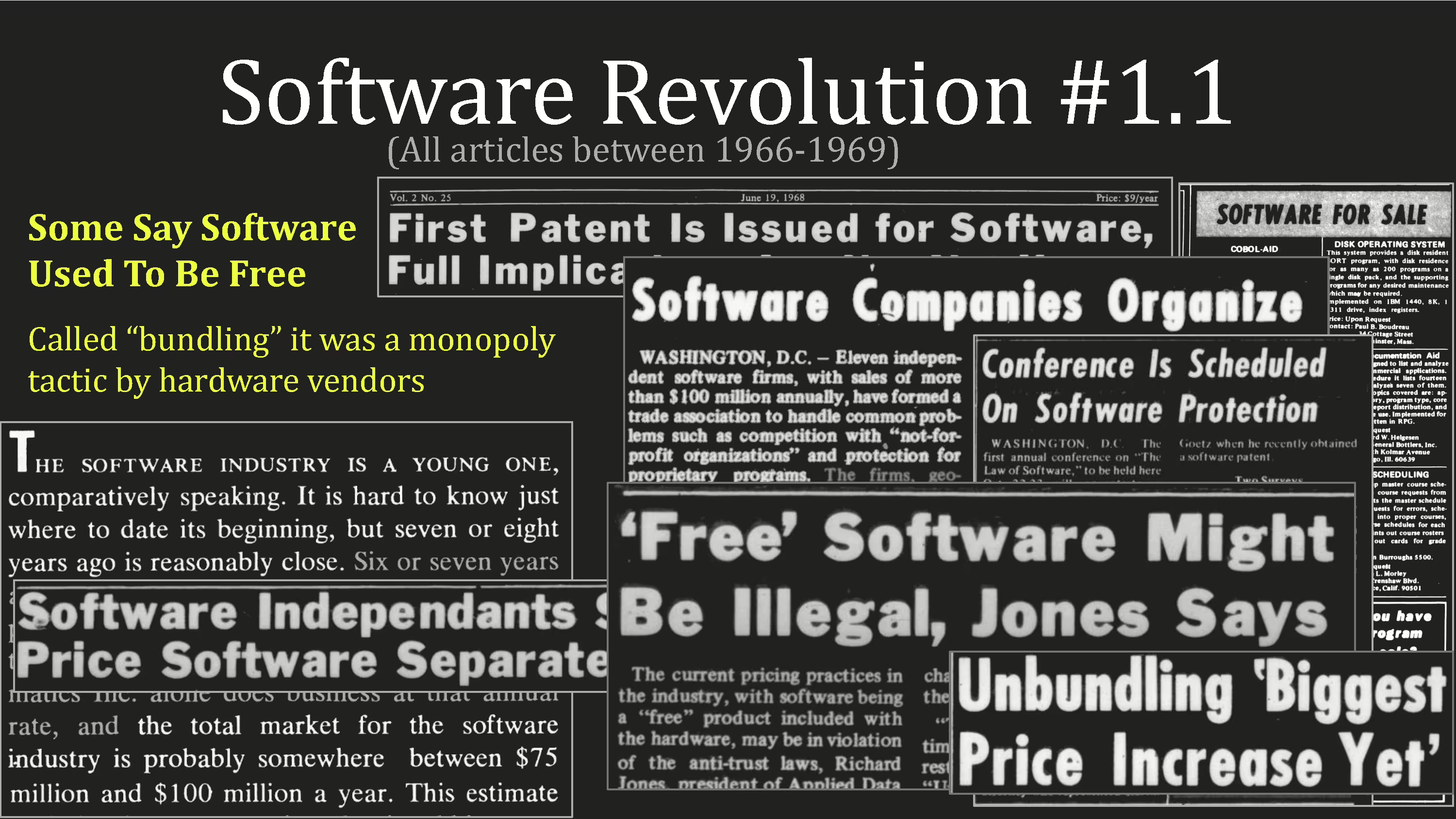

No, really. This is another falsifiable thing: Bruce Perens' chapter in Open Sources and Steven Levy in Hackers both allude to a Garden of Eden in the past, from which we have fallen, where all software was free.

Really?! Well, no. Here's a 1966 claim that commercial software was a $100 million industry. And then in 1969, 11 companies worth at least $100 million each got together to form a trade group.

Hardware vendors bundled software to lock in their customers like Microsoft did with Internet Explorer. It was as free as Windows is on a retail laptop. People were trying to DRM and patent software back in the 1960s and more than one succeeded.

Maybe there was some idyllic Valhalla in the bubble known as the MIT Artificial Intelligence Lab, but the real world was up to the same tricks it is now.

Anyways, software unbundling happened in 1968 and '69, vendor by vendor, and UNIX arose from this primordial soup. It is one of the oldest pieces of non-mainframe software that is still recognizable today.

Recognizable because the family tree looks like a European dynasty.

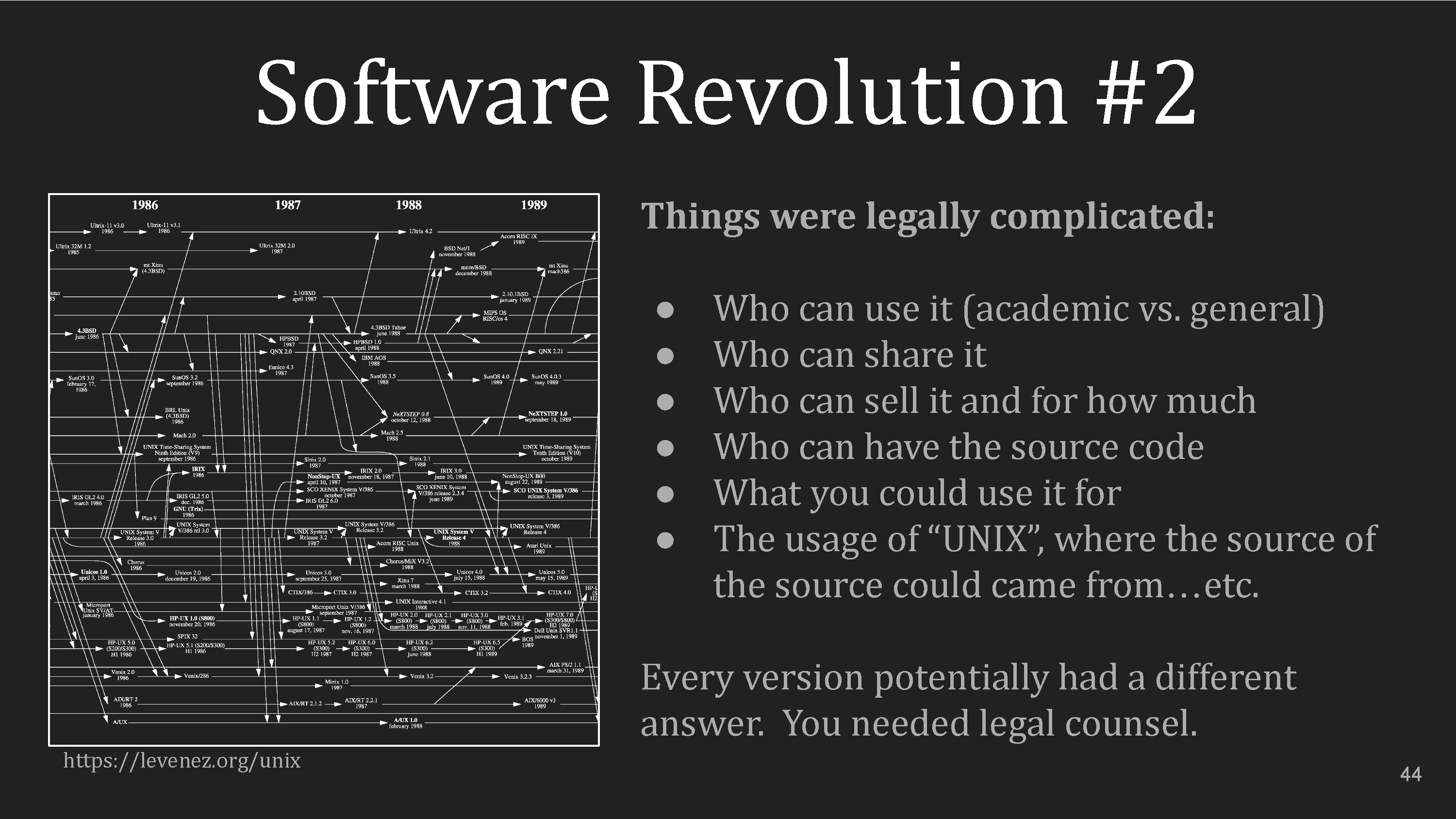

This UNIX timeline covers a few years of the late '80s. Zooming in you'd see Minix, MIT Trix, SunOS, Mach, Xenix…from the House of Free Software or AT&T or the Kingdom of Microsoft.

I'm still not a lawyer, but this is really confusing: not only did the source and binary have different terms, but sometimes only certain organizations had access to them.

For instance if you were an educational institution with a pre-existing AT&T license then you had access to BSD, I think? So a version might have multiple parents, each with a different term sheet. It's a legal Hapsburg.

That's why a few slides ago there was a puddle labeled UNIXES. Even the term UNIX itself is a confusing mess. Raise your hand if you think it's a trademark of AT&T.

No. 31 years ago AT&T sold Unix System Laboratories to Novell, who then handed the UNIX trademark to the X/Open Consortium, which merged with OSF and became the Open Group, which is now a standards organization. Some Linux distributions have gone through that certification process, so Linux is now a UNIX.

Really, you're just finding out right now?

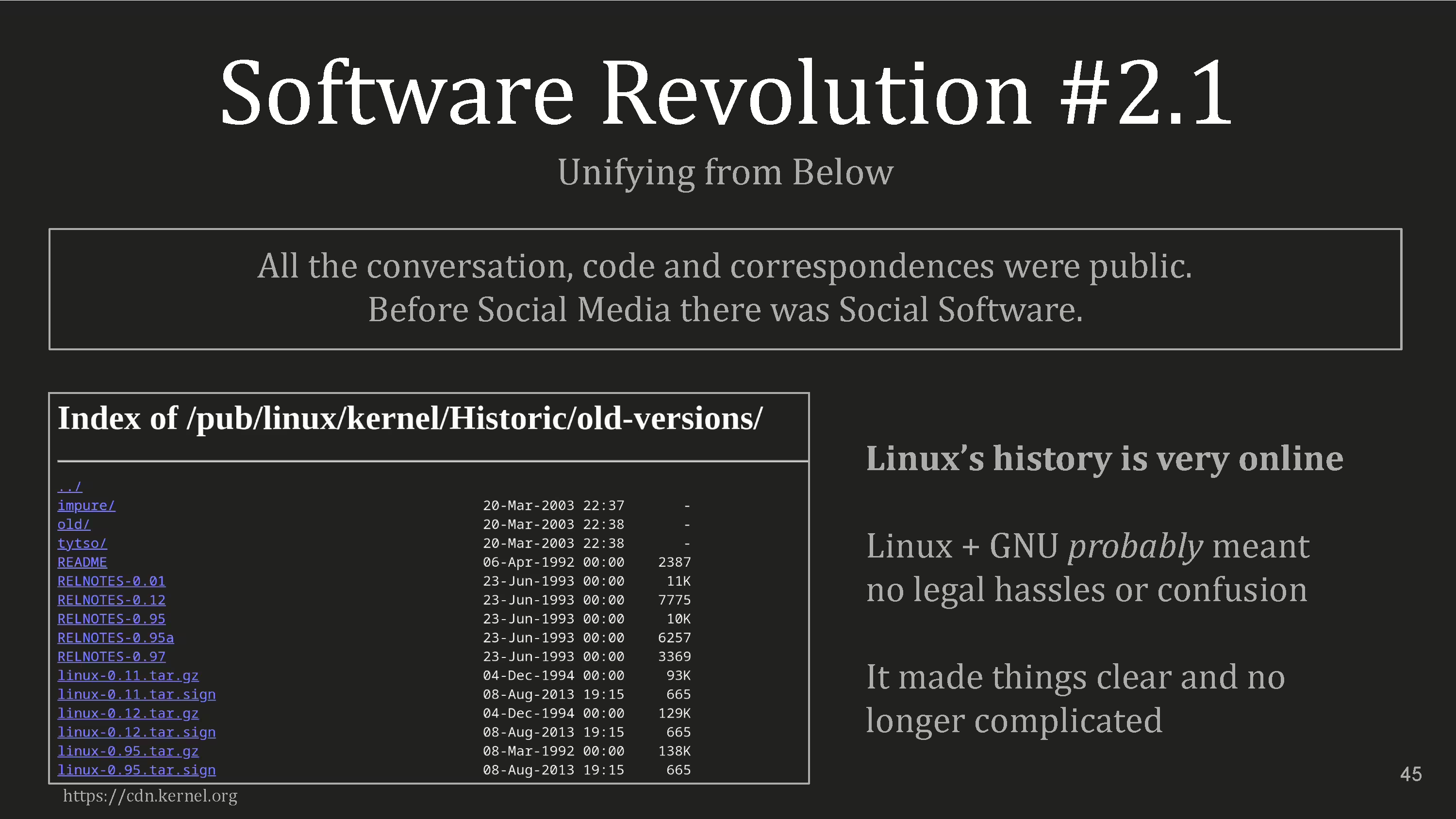

This stuff is absurd. We need something with clean, documented history, a clear license, and easy answers to all of these problems.

So Linux and effectively all modern open source projects have this solved. All development is open, the history is well archived, and OSI-approved licenses solve the family tree problems. The structure that's now ubiquitous is one of Linux’s major contributions.

Also let’s talk about the internal politics of building software.

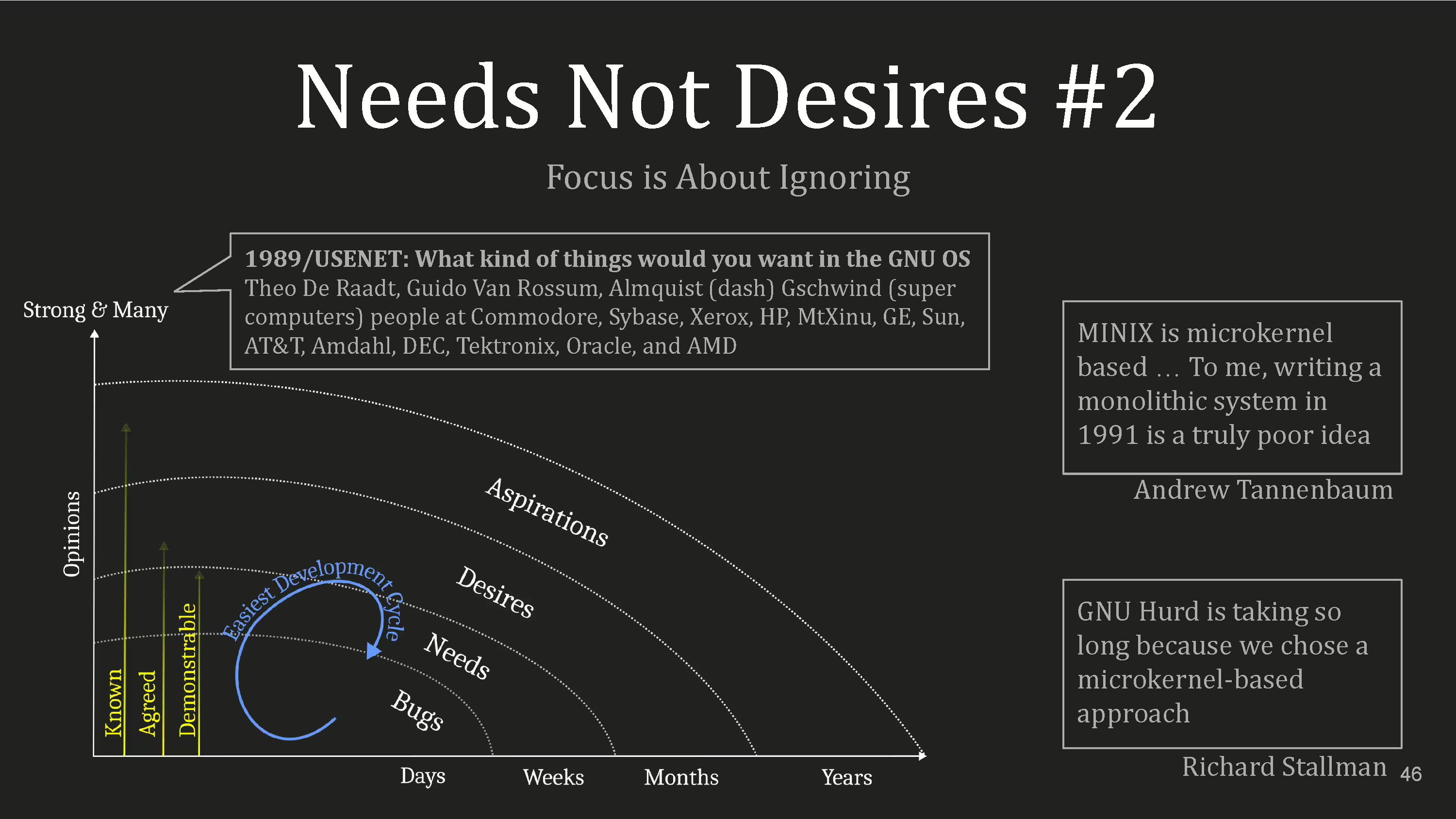

In the abstract penguin slide we covered the outside aspirational curve where things take decades and opinions are strong and many.

Things like paradigmatic ways of doing open source software development took 20 years to dominate, because the longevity and applicability of the more abstract solutions operate on the same time frame as their implementations. But within that exist lower-level Maslovian motivations.

And keeping things there makes them more actionable. Let’s say your network card isn’t sending out packets. We can say this bug is known, agreed upon, and demonstrable. So although it may not be easy, the labor path is traversable.

A new network card comes out, you need it to work on Linux. That’s a need. You can demonstrate and come to an agreement on what that would look like.

Pretend you want that network card to do something it wasn’t designed to do. That’s harder to demonstrate and agree upon.

To make that actionable, you need to pull the desire into the lower curve so that a development cycle can encompass it.

It’s worth noting the Tanenbaum-Torvalds debate from 1992 to illustrate this. Tanenbaum chastised Torvalds' approach because it wasn’t a microkernel and Linux was exclusive to the 386. Really, Linus was in these lower curves and Tanenbaum was trying to pull it up to the higher curves where things move far slower. That’s where the hot research always is: people trying to make these higher-level concepts more real.

GNU/Hurd takes a microkernel approach. In the early 2000s, Stallman claimed that was why it was taking so long and wasn’t very stable.

The higher-level curves are unlikely to succeed except as superstructures of the lower-level functions, in the same way that our asymmetric approach to platonic ideals happens on the back of incrementally more appropriate implementations. That is why you can snake a line from 1950s IBM SHARE to GitHub.

Through that process of clarifying the aspirations, they get moved to the concrete as they become material needs and bug problems.

The clarity of the present stands on both the triumph and wreckage of the past. For example, the Mach 3 microkernel led to Pink, NeXTSTEP, Workplace OS, Taligent, and eventually XNU, which is partly monolithic and is now the basis for macOS. To get there, that curve burned over a decade through multiple companies and billions of dollars. Also, the OSF group I mentioned before had a Mach-BSD hybrid named OSF/1. Apple was going to use it in an alliance with IBM, but that got canceled. It went on to become Tru64, whose last major release was in 2000, 24 years ago, to add IPv6 support.

How’s that transition going?

As a cynic I tend to know the cost of everything and the value of nothing. Oh, speaking of costs!

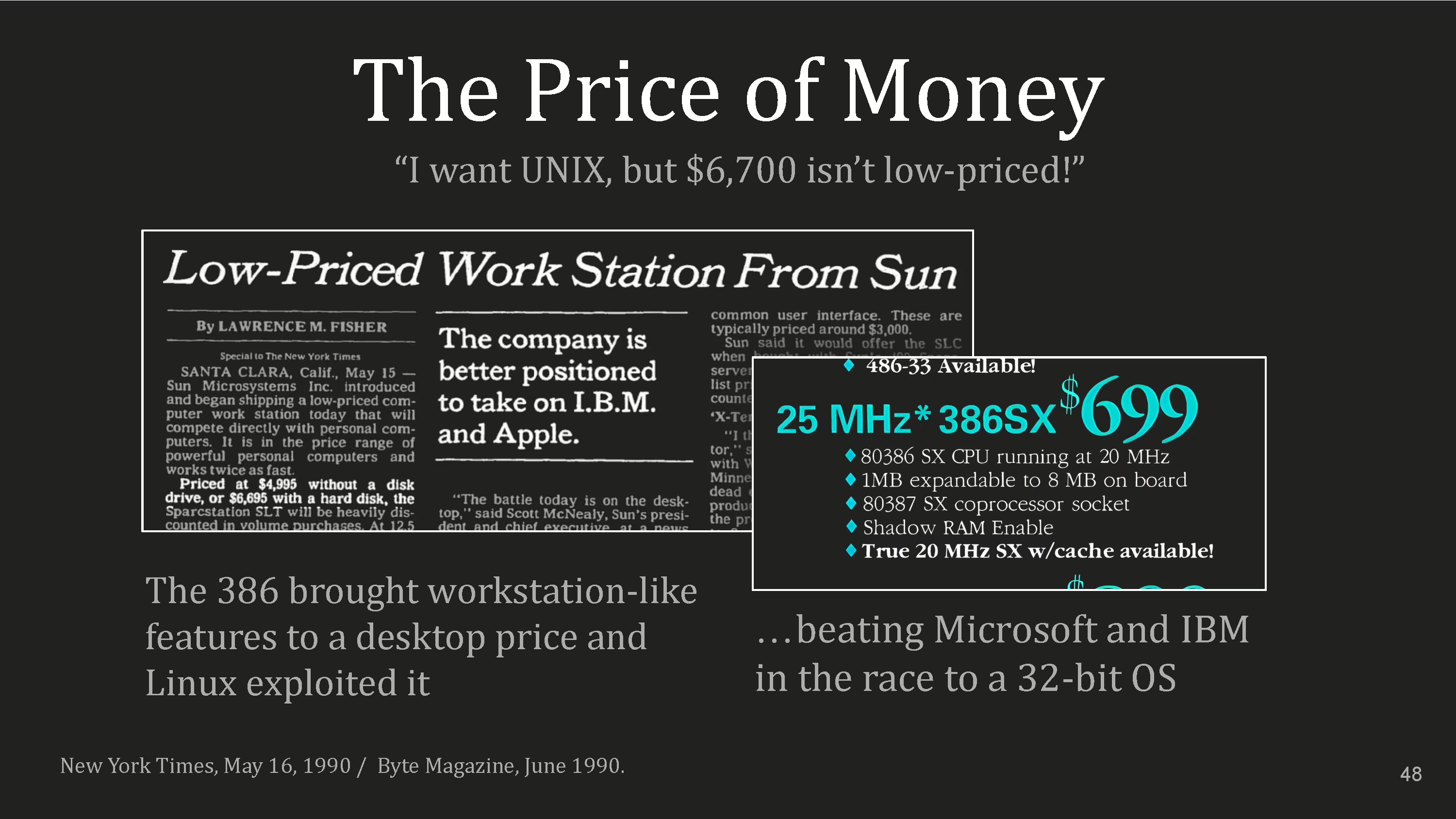

Here are both an article and an advertisement from May 1990. Sun says they have a cheap $6,700 computer. Here's a PC at about a tenth of the cost, which is fine if it can do some of the same things.

Historically that answer had been no, but referring back to the chart with needs and computing classes, this dynamic was likely to change soon. Especially since the 386 had technical features which made running UNIX-style operating systems more practical.

Let’s also talk about the price of Linux. Zero, right? Well, no. You were paying hourly for dialup internet access. But between 1989 and '94 that price dropped 90% and speeds quadrupled, which meant a download that had cost $100 now cost $2.50.
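The $2.50 figure follows from combining the two changes: a fixed-size download costs the hourly price times the hours connected, so a 90% price cut multiplies the cost by 0.1 and a fourfold speedup divides the hours by 4. A minimal sketch:

```python
def new_cost(old_cost, price_drop, speedup):
    """Cost of the same download after the hourly price falls by `price_drop`
    (a fraction) and the connection gets `speedup` times faster."""
    return old_cost * (1 - price_drop) / speedup


print(f"${new_cost(100, 0.90, 4):.2f}")  # $2.50
```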

You could also get Linux on floppies or CDs, initially priced like cheap commercial UNIX. SLS was $100. Nascent was $90. But the GPL allowed anyone to distribute Linux, so these prices collapsed quickly.

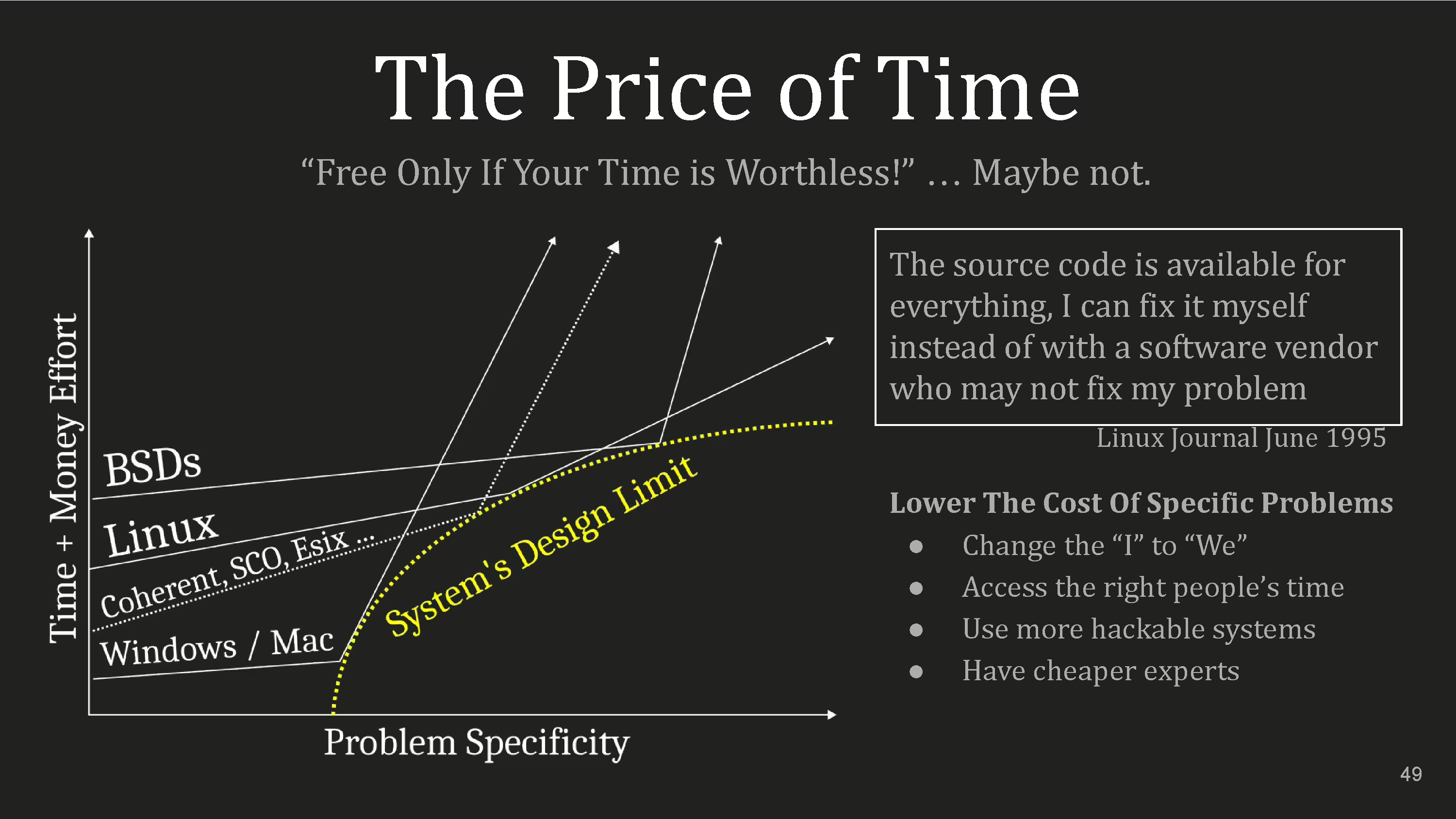

But that’s not the only kind of price there is; there's also the price of time.

This is a conceptual and not a quantitative graph.

Essentially Windows is the easiest but let's say you wanted to convert a bunch of files, sort the contents, pick a field, extract values, do some operation on them … these things get hard quickly.

Early on, the BSDs were a pain to set up, but they had networking before Linux; they were more professional. However, there were fewer people working on them, and they tended to be more experienced, so they were busy and expensive. Linux was mostly students, who are famously cheap.

Linux was also heavily hobbyist oriented which usually meant the systems were more hackable. The other UNIXes presumed you had something important to do.

The more difficult and time-consuming it is for a customer to use an information system, the less likely it is that they will use it. That’s Calvin Mooers, 1959. As problems become more intractable, the approachability of a system's lower levels and internals becomes increasingly important, because people tend to use the most convenient method in the least exacting mode available and stop as soon as minimally acceptable results are found. That’s the Principle of Least Effort, and it’s also how hacking works.

Hackers tend to build worlds where everything is possible but nothing of interest is easy. Alan Perlis called it the “Turing tar-pit” in 1982. Pulling yourself out of it, from a capable system to a usable one, requires some engine pushing forward.

Commons-based peer production can also be seen as “prosumer”: a producer and a consumer put together. It’s used in social media, where you are consuming other consumers’ productions.

Here our engine is problem/solution/integration, which keeps us in the bugs/needs development cycle. With the bazaar method of development, changes reflect back quickly, so new users have fewer problems with their different hardware, and for other people with, say, your network card, it just works.

But where do you find the people to do that? Well you build armies of course!

Large networks are important: their utility scales quickly with the size of the network because the number of possible subgroups grows much faster than the number of members who joined. That’s Reed’s Law.

For example, we start using Linux. You have a network card that doesn’t work. You now join the subgroup of people, becoming a “we” that needs this card to work. This only becomes an engine if we have the right people to complete the cycle we talked about.
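Reed's Law is usually stated as counting the possible subgroups of an N-member network, 2^N − N − 1 (every subset except the empty set and the N single members). The sketch below compares it with linear (Sarnoff) and pairwise (Metcalfe) growth to show why subgroup formation, like the group of people who need the same network card working, dominates as N grows. It counts possible groups only; it says nothing about which groups actually form.

```python
def sarnoff(n):
    """Broadcast-style value: proportional to the number of members."""
    return n


def metcalfe(n):
    """Metcalfe-style value: number of distinct pairs of members."""
    return n * (n - 1) // 2


def reed(n):
    """Reed-style value: number of subgroups with at least two members."""
    return 2 ** n - n - 1


for n in (5, 10, 20):
    print(n, sarnoff(n), metcalfe(n), reed(n))
```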

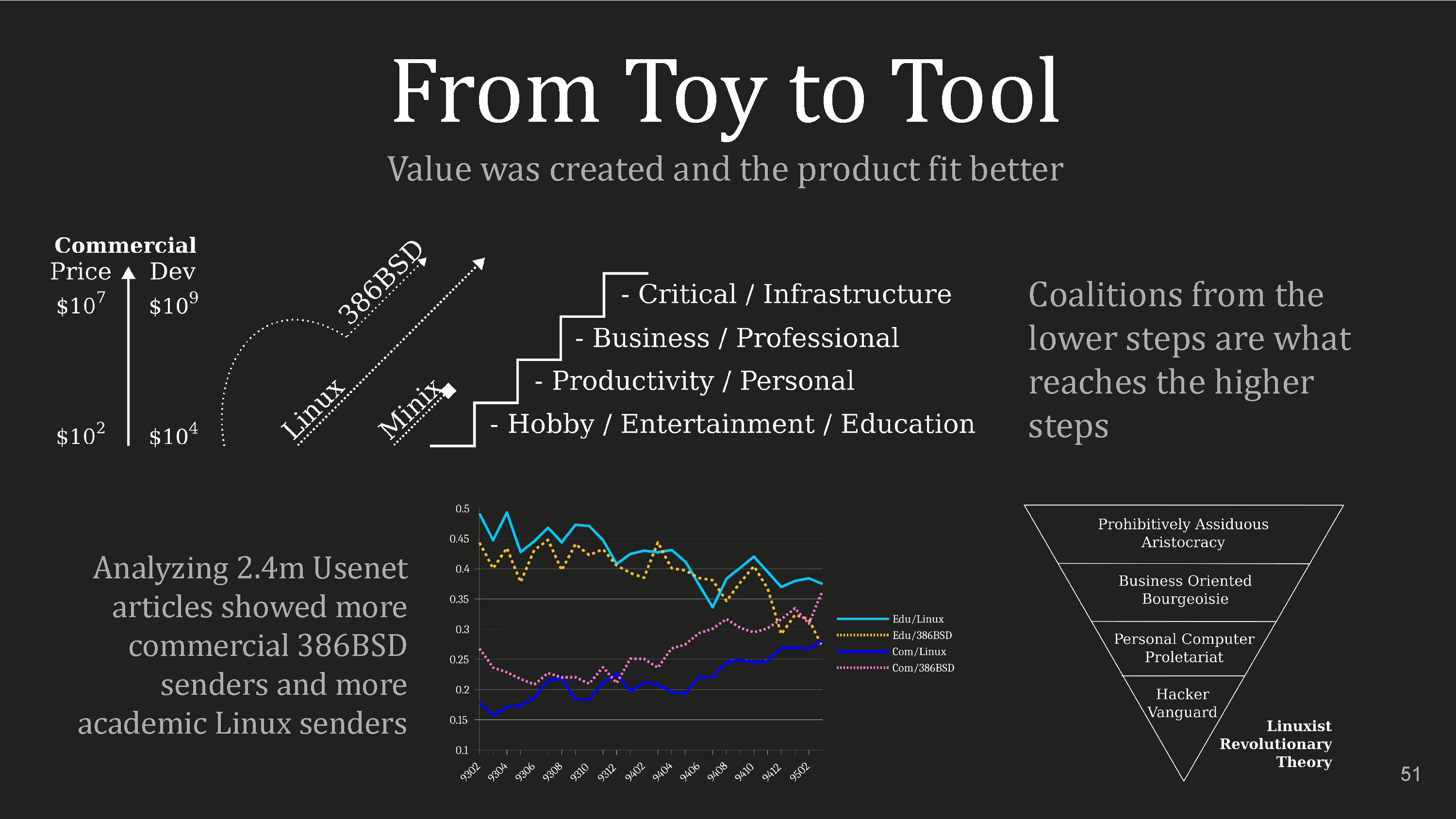

The first level here are enthusiast developers and bug fixers, followed by the curious and hobbyists, then the business needs and finally people that can’t have things fail.

Arguably one of the failings of the early BSDs is they were too lopsided on the upper steps and couldn’t get the virtuous cycle moving with enough velocity.

In an analysis of the email addresses on about 2.5 million USENET posts over a two-year period, BSD almost always had more commercial addresses and Linux more educational ones. So even though both were generally ascending the staircase, Linux had more torque from the bottom groups.
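The talk doesn't spell out how addresses were classified, so the following is only a hypothetical sketch of one way to do it: bucket each From address by its domain suffix, treating `.edu` and `ac.<country>` as educational and `.com` and `co.<country>` as commercial. It is deliberately crude; many early-1990s university addresses sat under plain country domains (Linus posted from `helsinki.fi`), which this heuristic files under "other".

```python
from collections import Counter


def classify(address):
    """Hypothetical heuristic: bucket an email address by its domain suffix."""
    labels = address.rsplit("@", 1)[-1].lower().split(".")
    tld = labels[-1]
    second = labels[-2] if len(labels) >= 2 else ""
    country_code = len(tld) == 2  # e.g. ox.ac.uk, example.co.uk
    if tld == "edu" or (country_code and second == "ac"):
        return "educational"
    if tld == "com" or (country_code and second == "co"):
        return "commercial"
    return "other"


def tally(posts):
    """posts: iterable of (newsgroup_family, from_address) pairs."""
    counts = {}
    for family, address in posts:
        counts.setdefault(family, Counter())[classify(address)] += 1
    return counts


sample = [  # made-up addresses for illustration
    ("linux", "student@cs.example.edu"),
    ("linux", "hacker@example.ac.uk"),
    ("bsd", "engineer@example.com"),
    ("bsd", "admin@example.co.uk"),
    ("linux", "someone@cs.helsinki.fi"),
]
print(tally(sample))
```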

I call this the Linuxist revolutionary theory. The hacker vanguard forms a coalition with the personal computer proletariat and the business oriented bourgeoisie becomes interested and finally the prohibitively assiduous aristocracy is able to use it.

There’s one last type of price: expertise.

You could assign a utility function roughly as the capabilities divided by the effort required.

Sometimes both capabilities and effort increase over time. Blender, for instance, is highly capable software but also takes a lot of expertise to use effectively. Many mobile apps, on the other hand, actually remove power features over time to make the product require less effort, while also making it less capable.

Still others, frameworks for example, suffer Fred Brooks' second-system effect: a new version that's harder to use and leads to inferior results, inverting the graph and decreasing the utility of the product.
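The capability-over-effort ratio and the three trajectories above can be sketched with made-up numbers. The capability and effort values below are invented purely to show the shapes; they are not measurements of Blender, any app, or any framework, and different numbers could move the feature-cutting case either way.

```python
def utility(capability, effort):
    """Rough utility: what a tool can do divided by the effort it takes."""
    return capability / effort


def trend(versions):
    """Classify a sequence of (capability, effort) releases by utility change."""
    first, last = utility(*versions[0]), utility(*versions[-1])
    if last > first:
        return "up"
    if last < first:
        return "down"
    return "flat"


# Illustrative, invented (capability, effort) pairs for an old and new release.
both_grow = [(10, 5), (20, 10)]      # more powerful, harder to learn
features_cut = [(10, 5), (8, 2)]     # less capable, much easier
second_system = [(10, 5), (9, 9)]    # harder to use, worse results

for name, versions in [("both_grow", both_grow), ("features_cut", features_cut),
                       ("second_system", second_system)]:
    print(name, trend(versions))
```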

Let’s talk about the multiple arrows. To get a larger user-base you need a diversity of capabilities requiring a diversity of efforts. Linux was able to achieve this by outsourcing the actual packaging of the OS through distributions.

This is another insight about the bazaar software model. Since the entire structure is flatter and organizational barriers are mostly removed, markets can be addressed quickly and readily as they naturally subdivide and form.

Because we’re doing social history, let's use critical theorist Marcuse’s idea of deradicalization: the dominant structures, say markets, subsume the novel. However, because people in bazaars can self-organize, they beat it to the punch and move faster than market forces, which is why open source decoupled structures are more dynamic and swift-footed than commercial approaches.

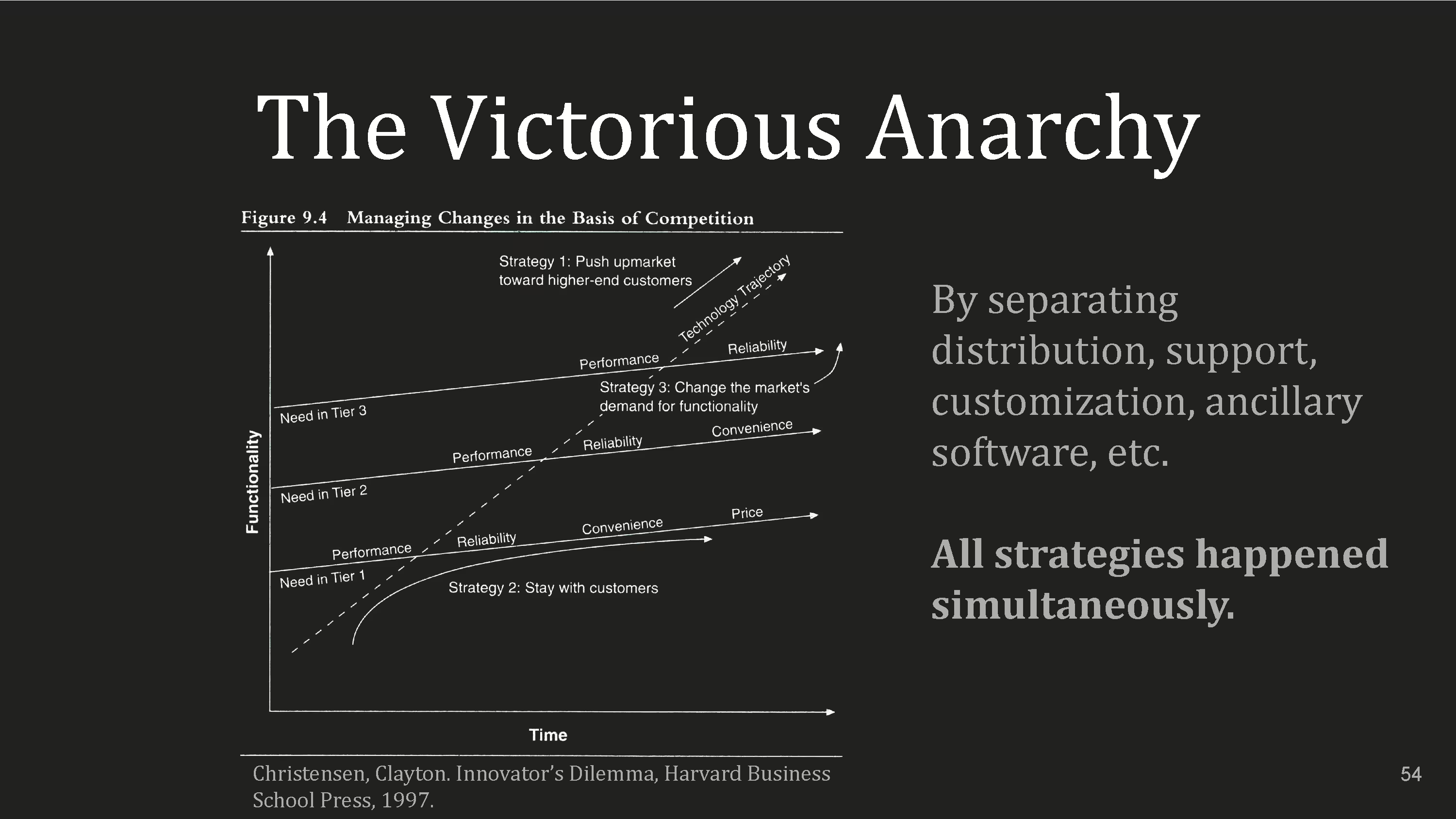

Here’s a chart from The Innovator’s Dilemma describing different strategies that can be taken as technology gets better. You can push upmarket to higher-end consumers or you can stay with your existing customers.

However, because of the horizontal development of Linux and the existence of distributions run by multiple organizations, it was able to do multiple strategies at once. Not only these but any other ones that have been left out of the graph.

A few slides ago we saw how Linux has a horizontalist, bazaar approach to software internally. Let’s look at the implications of this externally.

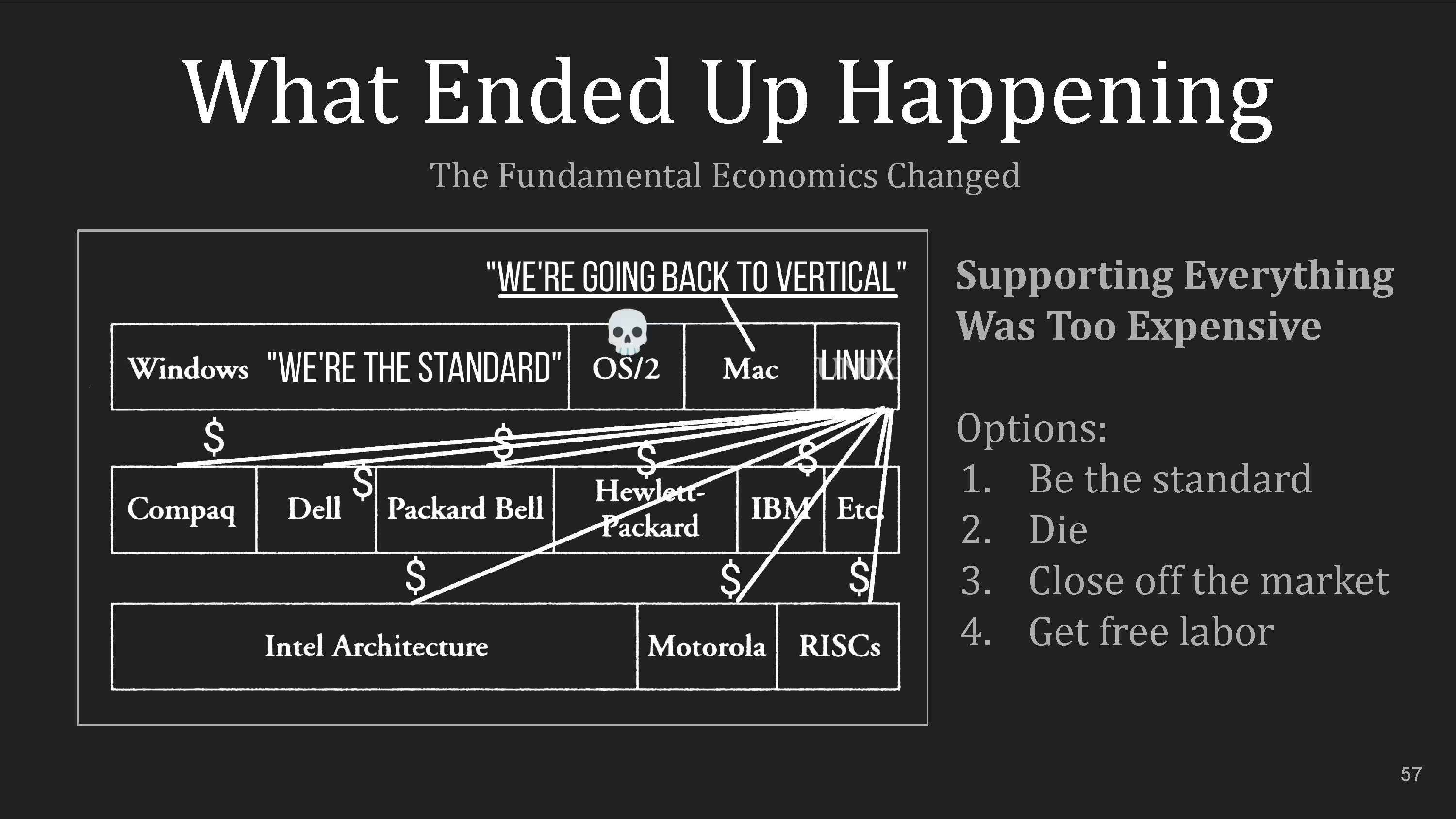

This chart is taken from Andy Grove’s 1996 book, Only the Paranoid Survive, and describes how the industry moved from one-stop-shop verticals to a horizontal structure. If we put this in the aspirational space, it can be viewed as part of the same decoupling superstructure as what the mainframes were doing in the late 1960s.

This transition was anticipated by a 1984 book, The Coming Computer Industry Shakeout, by Stephen McClellan. Quoting:

As software gains ascendancy, the keywords will no longer be customization, specialization and market niche. The new word will be integration. Systems integration will be the most sophisticated and difficult task computer companies will have to undertake.

Because that quote is 40 years old, we have the privilege of seeing what happened.

Essentially four strategies were developed to tackle this problem. The first is to impose yourself on the vertical by claiming to be the standard. In practice, only one player can do that, which is why OS/2 died. Apple took a different route by remaining its own vertical, while Linux had to push the wheel out of the tarpit and needed enough people to do it.

Luckily, timing was on their side.

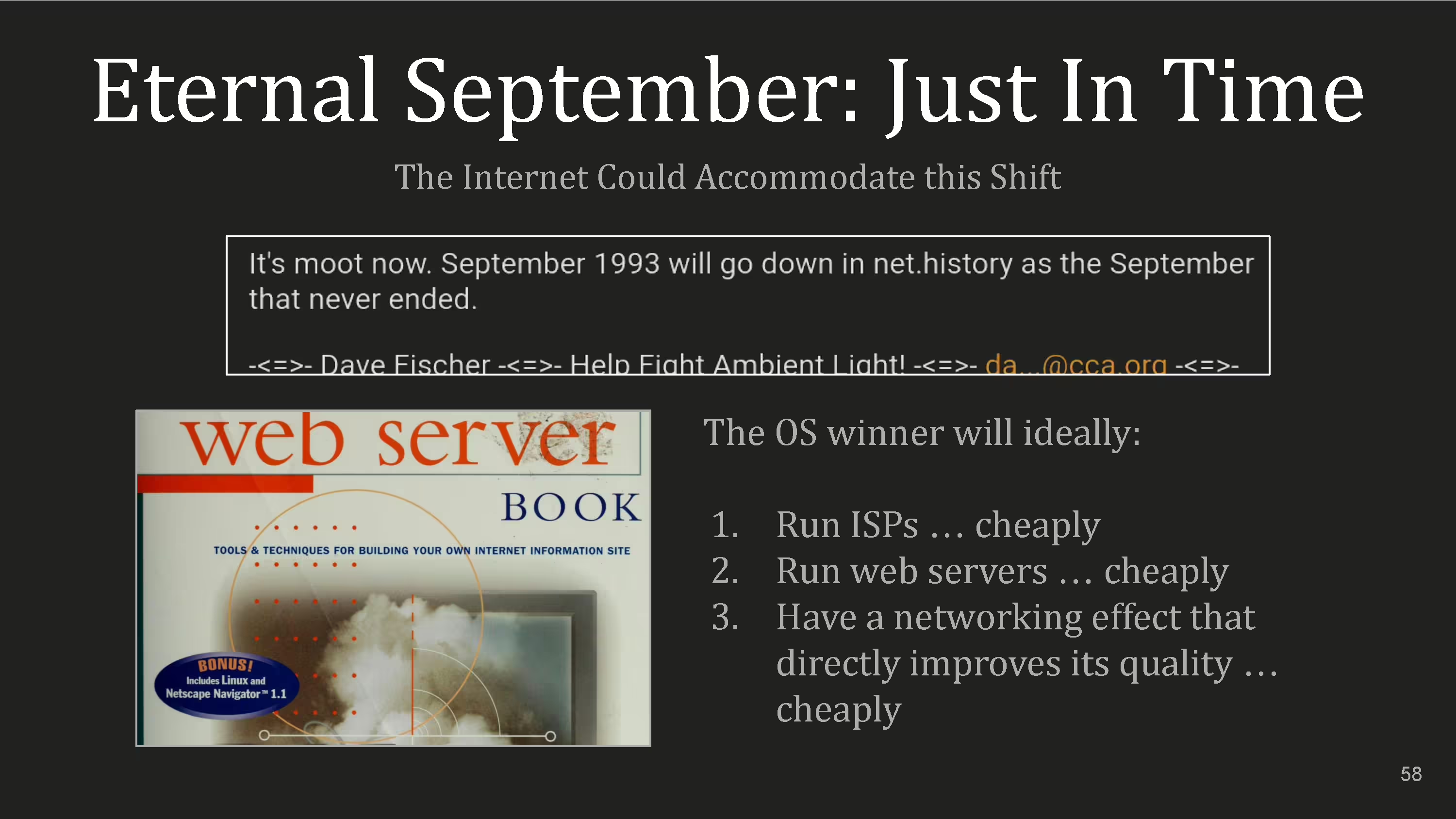

When the Internet was mostly accessed at universities, every September brought a flood of new people who had to be coached. As residential Internet access grew, the old guard dubbed 1993 the Eternal September: the beginning of mainstream Internet access.

For this to happen, cheap ISPs and web servers had to appear quickly to support the new users, and Linux was there to provide them.

It’s important to note that Linux was, on its face, oriented toward hobbyist and personal use at this time, even though Linux Journal ran articles in 1994 about using it as a web server and to run ISPs.

Contemporary texts such as Linux: Getting Started, Unleashing the Workstation in your PC, or Plug and Play Linux targeted the lower two steps of our staircase: hobbyist and personal use. The early Slackware, MCC, and SLS distros from that time also didn’t include web servers or tools to run a business. The first people to reach a new use case don’t get there with clean, off-the-shelf solutions. They play the scout role, clearing the road for the army to roll in. That’s why The Web Server Book in 1995 includes Linux.

Another benefit of the Eternal September is captured in Linus’s Law: given enough eyeballs, all bugs are shallow. Let's look at the various eyeballs.

The first “terms slide” claimed that Linux was mostly built by college students; here’s some evidence. After the first release in October, Linux got some local help, and in November it received contributions from Hong Kong, MIT, and the University of Utah. In December, code came from Calgary and Berkeley, and then students from Colorado and Maryland joined in January. So far, everyone is under 25, and the need for a solution like Linux to solve new problems was becoming increasingly pressing.

And these needs more or less persist to this day. Apple is vertical, Microsoft is entitled and Linux continues to climb stairs that have yet to be drawn. Here’s a quote by Torvalds:

I think the timing was good. Even just a year earlier, I don’t think it could have been done and a year later somebody else would have done something similar.

These two bottom charts show how Linux now dominates the supercomputer and web server space.

How’s everyone doing? Are you still awake? Yes, that’s typed on this sheet as is this. This is long so let’s have some fun. If there was a storefront named “open source” what would be displayed in the windows?

In fact, let's do some interactive theater with what looks like the Kekistan flag.

We’re a group of people in 1995 trying to bring free software to the masses. Our marketing director just told us about the “marketing mix” with the four P's: product, price, place, and promotion.

In order to have a figurehead for the free software movement, we need to find a piece of free software that meets these conditions. It should be easy to try, acquire, and understand. It should have a low price in time, money, and expertise.

I appreciate the suggestions so far. These are all excellent examples of free software. I especially liked the elevator pitch for Emacs Org mode. Before we settle on a project, I'm wondering if we've left anything out.

Any suggestions from the audience? Oh yes, you sir, who I’ve known for over a decade.

Oh, that’s a great idea. Maybe Linux can be the window dressing of the free software movement. Or, how about this: I have a better idea.

Let’s make an alt-copyright pipeline. We have low effort things for curious PC users to try. Then we gently tell them our community beliefs and encourage them to up their commitments.

They’ll become more familiar with projects which take effort and expertise, get involved and become an advocate.

Over time they’ll report bugs, help determine needs, and implement desires to move our aspirational project forward and make it more tangible.

In order to do this, we must be a welcoming community, out of necessity but also because we brought in the right people. We’ll call it the Jeeple’s temple.

So that’s how it happened!

Oh wait a second! How do we do it again?

The perhaps.

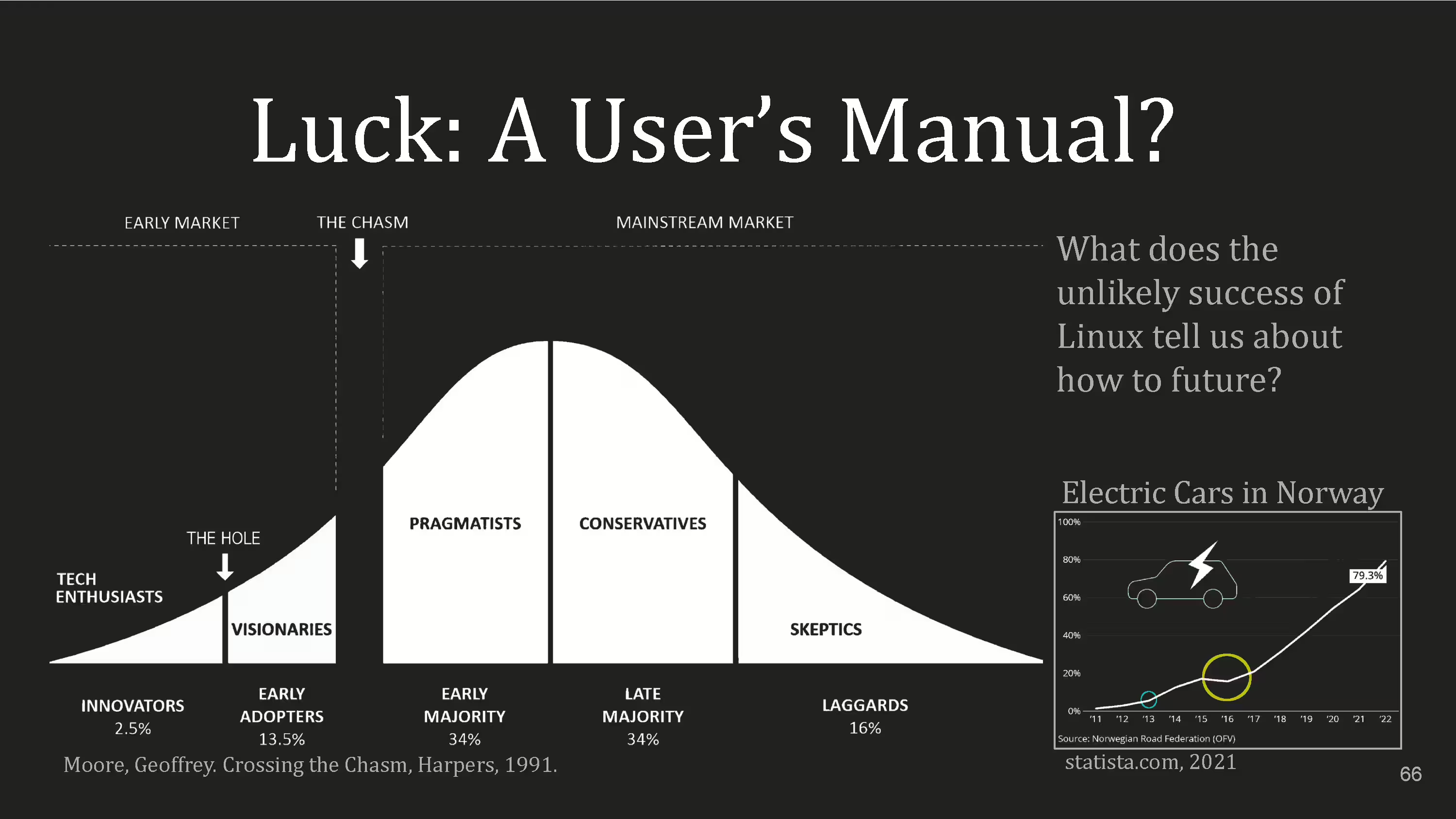

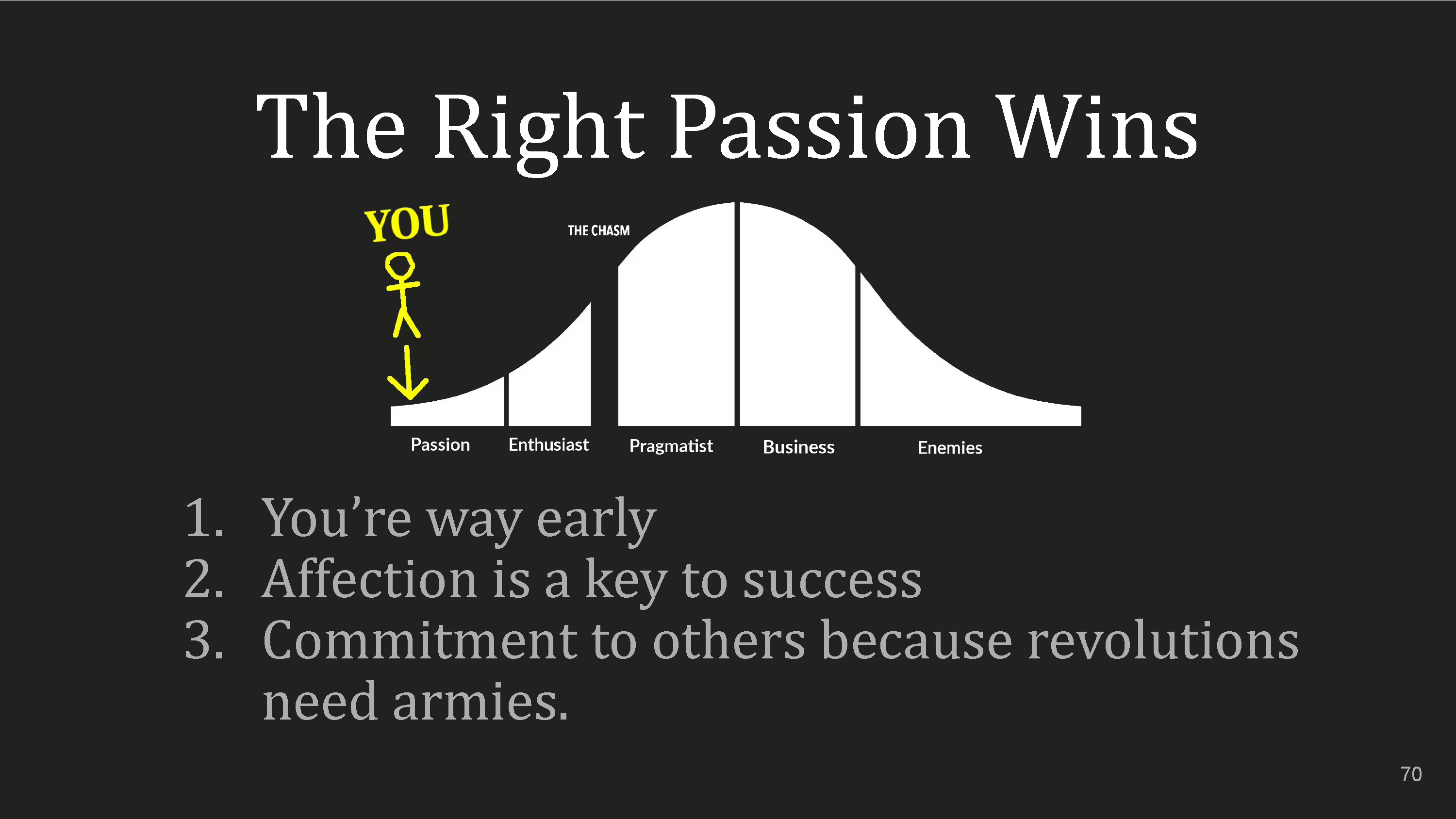

Geoffrey Moore made this graph in his 1991 book Crossing the Chasm. It proposes that as commercial products mature, users with different behaviors and expectations come around. There are two holes in this graph.

The first group is people who are aspirational and have a high tolerance for impracticality. They are willing to go the extra mile to make things work. Then there’s the small hole. In the graph on the right, showing electric car adoption in Norway, you can see that small slump in the first circle.

The next group is enthusiastic about the possibilities but prefers lower costs in effort, time, and expertise. Then there’s a really big hole. That’s the yellow circle in the graph.

The next group is the difficult one to reach. They’re looking for a working product and don’t want to be hassled with inconvenience. Many products don’t make it there.

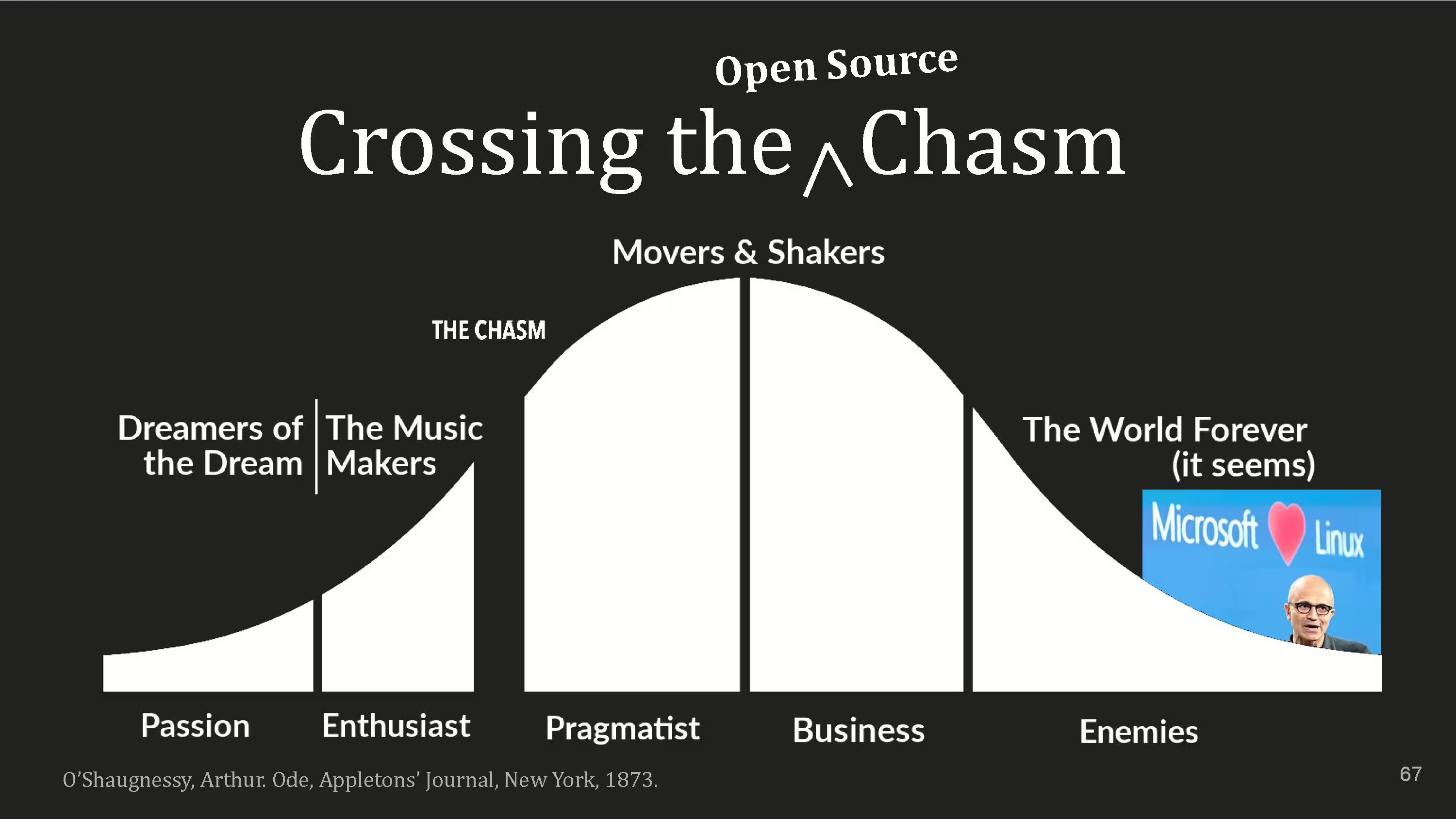

I present crossing the open source chasm.

The first group is passionate hackers trying to claw at the aspirational curve and bring it down to the bugs and needs. If they can keep their passions going long enough and make sufficient progress, then perhaps the music makers will come around and help recruit more passionate people to enlarge that base. That’s the first pipeline.

If the engine between the passionate and the enthusiasts can be maintained to drive the problem/solution/integration wheel, the project can get pulled out of the Turing tarpit. And if the software’s capabilities divided by the “effort demanded” yield a high enough utility ratio, the pragmatists will become interested and the chasm is crossed.

But it’s important to keep the pipeline well lubricated, because you’ll face new challenges and use cases and will need a few of those new users to slide along the responsibility graph to grow the coalition as the Law of Division takes hold.

The clarity of the present stands on both the triumph and wreckage of the past. Sometimes, for a city to become great, it must first be destroyed so that a new generation can seize the dream and try to execute it better.

Failure and the passage of time unbind us from our commitments. They naturally create the space to try again, giving us permission to kill our darlings and do a page-one rewrite. But only if we retain the liberty to do so.

Here are a few historical examples:

- Baird Televisor: an early mechanical television

- Cooke and Wheatstone telegraph: predating Morse code

- Arithmometer: the first practical office calculator

Each of these devices has a different history, but they all led to the same result: the aspirational curve wasn't ultimately captured by any particular implementation. Sitting on the left side of the curve, we'd like to intentionally accelerate the sociotechnical processes. But it's a counterintuitive art; otherwise, it'd be easy.

Strong IP enforcement on strong visions diminishes their chance of success. You must let go of that which you love the most. You may get the vision, execution, timing, or team right, but probably not all at once. Facebook, the iPhone, Google, Amazon: they’re all iterative executions of larger ideas owned by nobody. And arguably, each is failing on one or more of these fronts today.

If you’re working on a thing or have a dream and if it’s just you, then you are definitionally on the very left of the graph.

You could argue that for certain use cases, free software took over 20 years to cross the big chasm, and for others, such as desktop publishing, digital audio workstations, photo editing, or social media, the free software chasm still hasn't been crossed.

There needs to be a certain kind of affection for the people and their needs, desires, and aspirations. For these projects to eventually succeed (for, say, GIMP to overtake Photoshop), commitment to others with less responsibility is key, because that’s how you get the new recruits.

Whatever you’re working on, it will take longer and manifest differently than you thought. Don’t become codependent with it; that fosters resentment, anxiety, and low self-esteem, and you’ll probably become susceptible to the Great Man Theory. If something is dying, maybe that’s the fire you need to rebuild things. Listen to the needs and desires of the idea.

- Do one thing and do it well

- Work together

- Handle text streams, because that’s a universal interface

This is actually the UNIX philosophy as summarized by Peter Salus. But I’m serious about it: good software advice is also good people advice when building software.

Let’s go to Tracy Kidder, The Soul of a New Machine, 1981:

Looking into the VAX, West had imagined he saw a diagram of DEC’s corporate organization. He felt that VAX was too complicated. He did not like the system by which various parts of the machine communicated with each other; there was too much protocol involved. He decided that VAX embodied flaws in DEC’s corporate organization. The machine expressed that phenomenally successful company’s cautious, bureaucratic style.

Churchill said, "We shape our buildings and afterwards our buildings shape us." However, our buildings are different. According to Fred Brooks, the programmer, like the poet, works only slightly removed from pure thought-stuff; he builds his castles in the air, from air, creating by exertion of the imagination. And since wrecking balls for code are cheaper than those for concrete, don’t be afraid to use them.

Here’s another version of the UNIX philosophy. This time, think about it in terms of people.

- Make it easy to write, test, and run organizational efforts

- Interactive use with humans instead of batch processing

- Make do with smaller teams

- You’ll need all the engines to get over the chasms and move the projects forward

Organizations which design systems are constrained to produce designs which are copies of the communication structures of these organizations. Melvin Conway, Datamation, April 1968.

We study yesterday to create a better tomorrow. The future is ours to make.